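Introduction

Cloudflare Workers AI lets you run machine learning models on serverless GPUs across Cloudflare's global network. You can integrate these models into your own code through Workers, Pages, or the Cloudflare API. The platform supports a range of AI tasks, including image classification, text generation, and object detection.

Key features:

- Models: a curated set of open-source models for different AI tasks.

- Billing: usage of non-beta models is billed starting April 1, 2024.

- Resources: works with related products such as Vectorize, R2, and D1.

Visit the official model catalog for the full list.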

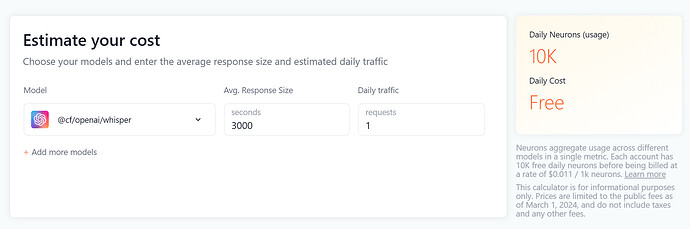

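Billing

The free plan includes 10,000 neurons per day (a neuron is Cloudflare's unit for metering AI usage; the official calculator can estimate consumption). 10,000 neurons are enough for roughly:

- 100-200 conversations

- 500 translations

- 500 seconds of speech-to-text

- 10,000 text classifications

- 1,500-15,000 embeddings

Since April 1, 2024, usage of the following models beyond 10,000 neurons per day is billed at $0.011 per 1,000 neurons: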

- bge-small-en-v1.5

- bge-base-en-v1.5

- bge-large-en-v1.5

- distilbert-sst-2-int8

- llama-2-7b-chat-int8

- llama-2-7b-chat-fp16

- mistral-7b-instruct-v0.1

- m2m100-1.2b

- resnet-50

- whisper

You can check your usage under the AI tab of the Cloudflare dashboard. For the exact pricing rules, see: Pricing · Cloudflare Workers AI docs
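To make the billing rule concrete, here is a minimal sketch of the overage arithmetic. It assumes only the two figures quoted in this section (10,000 free neurons per day and $0.011 per 1,000 neurons beyond that); the function and constant names are made up for illustration, and real invoices may round differently.

```javascript
// Figures quoted in this section; adjust them if the pricing changes.
const FREE_NEURONS_PER_DAY = 10000;
const USD_PER_1000_NEURONS = 0.011;

// Estimated charge in USD for one day's usage (hypothetical helper).
function dailyOverageCostUsd(neuronsUsed) {
  // Only the part above the free daily allowance is billable.
  const billable = Math.max(0, neuronsUsed - FREE_NEURONS_PER_DAY);
  return (billable / 1000) * USD_PER_1000_NEURONS;
}

console.log(dailyOverageCostUsd(8000).toFixed(3));  // "0.000"
console.log(dailyOverageCostUsd(60000).toFixed(3)); // "0.550"
```

In the second call, 50,000 neurons are billable, which is 50 blocks of 1,000 neurons at $0.011 each.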

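Rate limits

Automatic speech recognition

- 720 requests per minute

Image classification

- 3,000 requests per minute

Image-to-text

- 720 requests per minute

Object detection

- 3,000 requests per minute

Summarization

- 1,500 requests per minute

Text classification

- 2,000 requests per minute

Text embeddings

- 3,000 requests per minute (default)

- @cf/baai/bge-large-en-v1.5: 1,500 requests per minute

Text generation

- 300 requests per minute (default)

- @hf/thebloke/mistral-7b-instruct-v0.1-awq: 400 requests per minute

- @cf/microsoft/phi-2: 720 requests per minute

- @cf/qwen/qwen1.5-0.5b-chat: 1,500 requests per minute

- @cf/qwen/qwen1.5-1.8b-chat: 720 requests per minute

- @cf/qwen/qwen1.5-14b-chat-awq: 150 requests per minute

- @cf/tinyllama/tinyllama-1.1b-chat-v1.0: 720 requests per minute

Text-to-image

- 720 requests per minute (default)

- @cf/runwayml/stable-diffusion-v1-5-img2img: 1,500 requests per minute

Translation

- 720 requests per minute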
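If you want to pace requests on the client side, the limits above can be kept in a small lookup table. The sketch below is only an illustration: the task keys and function names are invented here, the numbers are copied from this section, and it assumes a per-model figure takes precedence over the task-wide one.

```javascript
// Task-wide limits in requests per minute (task keys are hypothetical labels).
const TASK_LIMITS = {
  'automatic-speech-recognition': 720,
  'image-classification': 3000,
  'image-to-text': 720,
  'object-detection': 3000,
  'summarization': 1500,
  'text-classification': 2000,
  'text-embeddings': 3000,
  'text-generation': 300,
  'text-to-image': 720,
  'translation': 720
};

// Per-model figures listed in this section.
const MODEL_LIMITS = {
  '@cf/baai/bge-large-en-v1.5': 1500,
  '@hf/thebloke/mistral-7b-instruct-v0.1-awq': 400,
  '@cf/microsoft/phi-2': 720,
  '@cf/qwen/qwen1.5-0.5b-chat': 1500,
  '@cf/qwen/qwen1.5-1.8b-chat': 720,
  '@cf/qwen/qwen1.5-14b-chat-awq': 150,
  '@cf/tinyllama/tinyllama-1.1b-chat-v1.0': 720,
  '@cf/runwayml/stable-diffusion-v1-5-img2img': 1500
};

// Requests per minute for a model, falling back to the task-wide limit.
function requestsPerMinute(task, model) {
  if (model in MODEL_LIMITS) return MODEL_LIMITS[model];
  if (task in TASK_LIMITS) return TASK_LIMITS[task];
  throw new Error(`Unknown task: ${task}`);
}

// Minimum spacing between requests, in milliseconds, to stay under the limit.
function minIntervalMs(task, model) {
  return 60000 / requestsPerMinute(task, model);
}

console.log(requestsPerMinute('text-generation', '@cf/qwen/qwen1.5-14b-chat-awq')); // 150
console.log(minIntervalMs('text-generation', '@cf/meta/llama-3-8b-instruct'));      // 200
```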

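Quick start

Before you begin, sign up for a Cloudflare account and log in.

Get your Account ID

Open the dashboard; the string after the last / in the address bar is your Account ID.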
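As a small illustration of that rule, the sketch below pulls the last path segment out of a dashboard URL and builds the Workers AI base URL from it. The example URL and its ID are made up, and the helper names are hypothetical; the base URL pattern is the one used for base_url in the OneAPI settings.

```javascript
// Returns the last non-empty path segment of a dashboard URL (hypothetical helper).
function accountIdFromDashboardUrl(dashboardUrl) {
  const segments = new URL(dashboardUrl).pathname.split('/').filter(Boolean);
  if (segments.length === 0) throw new Error('No path segment found in URL');
  return segments[segments.length - 1];
}

// Builds the base_url used by the OneAPI settings in this guide.
function workersAiBaseUrl(accountId) {
  return `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai`;
}

// Made-up account ID, for illustration only.
const id = accountIdFromDashboardUrl('https://dash.cloudflare.com/0123456789abcdef0123456789abcdef');
console.log(workersAiBaseUrl(id));
```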

Get an API token

Open the API tokens page and create a token, making sure to select Workers AI.

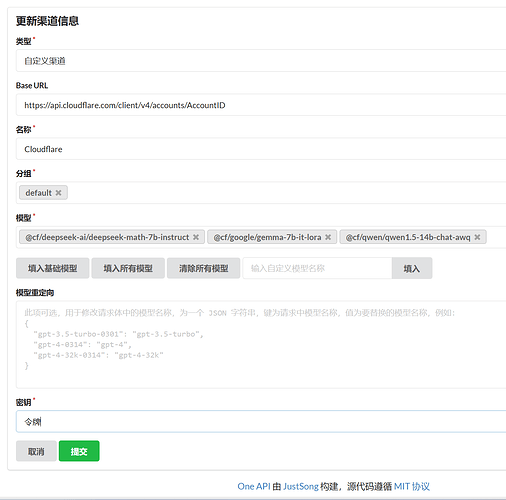

OneAPI configuration

Chat models

key: the token you created

base_url: https://api.cloudflare.com/client/v4/accounts/AccountID/ai (replace AccountID with your own Account ID)

model:

- @cf/deepseek-ai/deepseek-math-7b-instruct

- @cf/defog/sqlcoder-7b-2

- @cf/fblgit/una-cybertron-7b-v2-awq

- @cf/fblgit/una-cybertron-7b-v2-bf16

- @cf/google/gemma-2b-it-lora

- @cf/google/gemma-7b-it-lora

- @cf/meta-llama/llama-2-7b-chat-hf-lora

- @cf/meta/llama-2-7b-chat-fp16

- @cf/meta/llama-2-7b-chat-int8

- @cf/meta/llama-3-8b-instruct

- @cf/meta/llama-3-8b-instruct-awq

- @cf/microsoft/phi-2

- @cf/mistral/mistral-7b-instruct-v0.1

- @cf/mistral/mistral-7b-instruct-v0.1-vllm

- @cf/mistral/mistral-7b-instruct-v0.2-lora

- @cf/openchat/openchat-3.5-0106

- @cf/qwen/qwen1.5-0.5b-chat

- @cf/qwen/qwen1.5-1.8b-chat

- @cf/qwen/qwen1.5-14b-chat-awq

- @cf/qwen/qwen1.5-7b-chat-awq

- @cf/thebloke/discolm-german-7b-v1-awq

- @cf/tiiuae/falcon-7b-instruct

- @cf/tinyllama/tinyllama-1.1b-chat-v1.0

- @hf/google/gemma-7b-it

- @hf/mistral/mistral-7b-instruct-v0.2

- @hf/nexusflow/starling-lm-7b-beta

- @hf/nousresearch/hermes-2-pro-mistral-7b

- @hf/thebloke/codellama-7b-instruct-awq

- @hf/thebloke/deepseek-coder-6.7b-base-awq

- @hf/thebloke/deepseek-coder-6.7b-instruct-awq

- @hf/thebloke/llama-2-13b-chat-awq

- @hf/thebloke/llamaguard-7b-awq

- @hf/thebloke/mistral-7b-instruct-v0.1-awq

- @hf/thebloke/neural-chat-7b-v3-1-awq

- @hf/thebloke/openhermes-2.5-mistral-7b-awq

- @hf/thebloke/zephyr-7b-beta-awq

POST example:

    curl --request POST \
      --url "https://api.cloudflare.com/client/v4/accounts/${AccountID}/ai/v1/chat/completions" \
      --header 'Authorization: Bearer YOUR_TOKEN' \
      --header 'Content-Type: application/json' \
      --data '{
        "model": "@cf/meta/llama-3-8b-instruct",
        "messages": [
          {
            "role": "user",
            "content": "how to build a wooden spoon in 3 short steps? give as short an answer as possible"
          }
        ]
      }'
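The same request can be made from JavaScript with fetch. This is a minimal sketch, not an official client: the function names are hypothetical, and reading `choices[0].message.content` assumes the endpoint returns the usual OpenAI-style chat completion shape.

```javascript
// Builds the URL and fetch options for a chat completion request (hypothetical helper).
function buildChatRequest(accountId, token, model, userMessage) {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/v1/chat/completions`,
    init: {
      method: 'POST',
      headers: {
        'Authorization': `Bearer ${token}`,
        'Content-Type': 'application/json'
      },
      body: JSON.stringify({
        model,
        messages: [{ role: 'user', content: userMessage }]
      })
    }
  };
}

// Sends the request and returns the reply text.
// Assumption: the response follows the OpenAI chat completion format.
async function chat(accountId, token, model, userMessage) {
  const { url, init } = buildChatRequest(accountId, token, model, userMessage);
  const response = await fetch(url, init);
  if (!response.ok) throw new Error(`Request failed with status ${response.status}`);
  const json = await response.json();
  return json.choices[0].message.content;
}

// Usage (needs a real account ID and token):
// chat('YOUR_ACCOUNT_ID', 'YOUR_TOKEN', '@cf/meta/llama-3-8b-instruct', 'Hello').then(console.log);
```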

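Embedding models

Apart from the model names, the setup is identical to the chat models, so both can share one OneAPI channel.

key: the token you created

base_url: https://api.cloudflare.com/client/v4/accounts/AccountID/ai (replace AccountID with your own Account ID)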

model:

- @cf/baai/bge-base-en-v1.5

- @cf/baai/bge-large-en-v1.5

- @cf/baai/bge-small-en-v1.5
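For reference, an embedding request through the same base_url might look like the sketch below. It rests on two assumptions that are not spelled out in this guide: that the OpenAI-compatible path is `/v1/embeddings`, and that the response uses the OpenAI layout where each item in `data` carries an `embedding` array. The helper names are hypothetical.

```javascript
// Builds the URL and fetch options for an embeddings request (hypothetical helper).
// Assumption: the OpenAI-compatible path is /v1/embeddings under the same base_url.
function buildEmbeddingsRequest(accountId, token, model, input) {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/v1/embeddings`,
    init: {
      method: 'POST',
      headers: {
        'Authorization': `Bearer ${token}`,
        'Content-Type': 'application/json'
      },
      body: JSON.stringify({ model, input })
    }
  };
}

// Extracts the vectors from an OpenAI-style embeddings response (assumed shape).
function vectorsFromResponse(json) {
  if (!json || !Array.isArray(json.data)) throw new Error('Unexpected embeddings response');
  return json.data.map(item => item.embedding);
}

// Usage (needs a real account ID and token):
// const { url, init } = buildEmbeddingsRequest('YOUR_ACCOUNT_ID', 'YOUR_TOKEN', '@cf/baai/bge-base-en-v1.5', ['hello world']);
// fetch(url, init).then(r => r.json()).then(j => console.log(vectorsFromResponse(j)[0].length));
```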

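Text-to-image models

key: the token you created

base_url: your Worker's address. The default Worker address is blocked in mainland China, so you must configure a custom route.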

model:

- @cf/bytedance/stable-diffusion-xl-lightning

- @cf/lykon/dreamshaper-8-lcm

- @cf/runwayml/stable-diffusion-v1-5-img2img

- @cf/runwayml/stable-diffusion-v1-5-inpainting

- @cf/stabilityai/stable-diffusion-xl-base-1.0

worker.js

Remember to replace AccountID with your own Account ID.

    // Proxies OpenAI-style chat requests to a Workers AI text-to-image model.
    addEventListener('fetch', event => {
      event.respondWith(handleRequest(event.request));
    });

    // CORS headers shared by the responses below.
    const CORS_HEADERS = {
      'Access-Control-Allow-Origin': '*',
      'Access-Control-Allow-Headers': '*'
    };

    async function handleRequest(request) {
      // Answer CORS preflight requests (a 204 response must not carry a body).
      if (request.method === 'OPTIONS') {
        return new Response(null, { status: 204, headers: CORS_HEADERS });
      }

      // Serve uploaded images by proxying /file/... to telegra.ph.
      if (/^(https?:\/\/[^\/]*?)\/file\//i.test(request.url)) {
        if (request.headers.get('If-Modified-Since')) {
          return new Response(null, {
            status: 304,
            headers: { ...CORS_HEADERS, 'Last-Modified': request.headers.get('If-Modified-Since') }
          });
        }
        const img = await fetch(request.url.replace(/^(https?:\/\/[^\/]*?)\//, 'https://telegra.ph/'));
        return new Response(img.body, {
          status: img.status,
          headers: {
            ...CORS_HEADERS,
            'Content-Type': img.headers.get('Content-Type'),
            'Last-Modified': new Date().toUTCString(),
            'Cache-Control': 'public, max-age=31536000'
          }
        });
      }

      const url = new URL(request.url);
      const search = url.searchParams;

      // Outside debug mode, only POST /v1/chat/completions is accepted.
      if (!search.get('debug')) {
        if (url.pathname !== '/v1/chat/completions' || request.method !== 'POST') {
          return new Response('Not Found or Method Not Allowed', { status: 404, headers: CORS_HEADERS });
        }
      }

      // The token comes from the Authorization header, or from ?key= as a fallback.
      const key = search.get('key');
      const authHeader = request.headers.get('Authorization') || (key ? 'Bearer ' + key : null);
      if (!authHeader || !authHeader.startsWith('Bearer ')) {
        return new Response('Unauthorized: Missing or invalid Authorization header', { status: 401, headers: CORS_HEADERS });
      }

      let data;
      try {
        data = await request.json();
      } catch (error) {
        if (!search.get('debug')) return new Response('Bad Request: Invalid JSON', { status: 400 });
        // Debug mode: build the request from query parameters instead.
        data = {
          model: search.get('model') || '@cf/stabilityai/stable-diffusion-xl-base-1.0',
          messages: [{ role: 'user', content: search.get('prompt') || 'cat' }]
        };
      }
      if (!data || !data.model || !data.messages || data.messages.length === 0) {
        return new Response('Bad Request: Missing required fields', { status: 400 });
      }

      // The last message is used as the image prompt.
      const prompt = data.messages[data.messages.length - 1].content;
      // Replace AccountID with your own Account ID.
      const cloudflareUrl = `https://api.cloudflare.com/client/v4/accounts/AccountID/ai/run/${data.model}`;
      const requestBody = JSON.stringify({
        prompt: prompt,
        num_inference_steps: 20,
        guidance_scale: 7.5,
        strength: 1
      });
      const currentTimestamp = Math.floor(Date.now() / 1000);
      const uniqueId = `imggen-${currentTimestamp}`;

      try {
        const apiResponse = await fetch(cloudflareUrl, {
          method: 'POST',
          headers: { 'Authorization': authHeader, 'Content-Type': 'application/json' },
          body: requestBody
        });
        if (!apiResponse.ok) {
          throw new Error('Request error: ' + apiResponse.status);
        }

        // Workers AI returns the raw image; upload it to telegra.ph to obtain a URL.
        const imageBlob = await apiResponse.blob();
        const formData = new FormData();
        formData.append('file', imageBlob, 'image.jpg');
        const uploadResponse = await fetch('https://telegra.ph/upload', { method: 'POST', body: formData });
        if (!uploadResponse.ok) {
          throw new Error('Failed to upload image');
        }
        const uploadResult = await uploadResponse.json();
        // Point the /file/... path at this Worker so the image is served through the proxy above.
        const imageUrl = request.url.match(/^(https?:\/\/[^\/]*?)\//)[1] + uploadResult[0].src;

        const responsePayload = {
          id: uniqueId,
          object: 'chat.completion.chunk',
          created: currentTimestamp,
          model: data.model,
          choices: [
            {
              index: 0,
              // Assumption: the image is returned to the chat client as a Markdown image link.
              delta: { content: `![image](${imageUrl})` },
              finish_reason: 'stop'
            }
          ]
        };

        // Reply with a single server-sent event, as a streaming chat client expects.
        return new Response(`data: ${JSON.stringify(responsePayload)}\n\n`, {
          status: 200,
          headers: { ...CORS_HEADERS, 'Content-Type': 'text/event-stream' }
        });
      } catch (error) {
        return new Response('Internal Server Error: ' + error.message, { status: 500, headers: CORS_HEADERS });
      }
    }
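On the client side, the Worker's reply is a single server-sent event whose `data:` line holds a chat completion chunk. The sketch below shows one way to pull `choices[0].delta.content` out of such a body. The function name is hypothetical, the sample payload is invented, and the `[DONE]` check is only a precaution in case a terminator line is ever added.

```javascript
// Collects delta.content from every "data: {...}" line of an SSE body (hypothetical helper).
function contentFromSse(sseText) {
  const parts = [];
  for (const line of sseText.split('\n')) {
    if (!line.startsWith('data: ')) continue;
    const payload = line.slice('data: '.length).trim();
    // Skip empty lines and the conventional end-of-stream marker.
    if (payload === '' || payload === '[DONE]') continue;
    const chunk = JSON.parse(payload);
    const delta = chunk.choices && chunk.choices[0] && chunk.choices[0].delta;
    if (delta && typeof delta.content === 'string') parts.push(delta.content);
  }
  return parts.join('');
}

// Invented sample in the same shape as the Worker's response.
const sample = 'data: {"id":"imggen-1","object":"chat.completion.chunk","choices":[{"index":0,"delta":{"content":"hello"},"finish_reason":"stop"}]}\n\n';
console.log(contentFromSse(sample)); // hello
```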

Speech-to-text models

key: the token you created

base_url: your Worker's address

model:

- @cf/openai/whisper

- @cf/openai/whisper-sherpa

- @cf/openai/whisper-tiny-en

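POST example:

Replace domain.com with your Worker's address. The model field is sent with --form-string because curl's -F would treat a value starting with @ as a file to upload.

    curl -X POST https://domain.com/v1/audio/transcriptions \
      -H "Authorization: Bearer YOUR_TOKEN" \
      -F "file=@C:\Users\Folders\audio.mp3" \
      --form-string "model=@cf/openai/whisper"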

worker.js

Remember to replace AccountID with your own Account ID.

    // Accepts OpenAI-style transcription uploads and forwards the audio to Workers AI.
    addEventListener('fetch', event => {
      event.respondWith(handleRequest(event.request))
    })

    async function handleRequest(request) {
      const { pathname } = new URL(request.url)
      if (request.method !== 'POST' || pathname !== '/v1/audio/transcriptions') {
        return new Response('Not Found', { status: 404 })
      }

      // The client sends multipart form data with "file" and "model" fields.
      const formData = await request.formData()
      const file = formData.get('file')
      const model = formData.get('model')
      if (!file || !model) {
        return new Response('File or model not provided', { status: 400 })
      }

      // Replace AccountID with your own Account ID.
      const apiUrl = `https://api.cloudflare.com/client/v4/accounts/AccountID/ai/run/${model}`
      // The raw audio bytes are sent as the request body.
      const apiResponse = await fetch(apiUrl, {
        method: 'POST',
        headers: {
          'Authorization': request.headers.get('Authorization'),
          'Content-Type': 'application/octet-stream'
        },
        body: file.stream()
      })
      const apiResult = await apiResponse.json()

      // Report upstream failures instead of throwing on a missing result.
      if (!apiResponse.ok || !apiResult.result) {
        return new Response(JSON.stringify({ error: 'Transcription failed' }), {
          status: 502,
          headers: { 'Content-Type': 'application/json' }
        })
      }

      // Reply in the OpenAI-style { text } shape.
      return new Response(JSON.stringify({ text: apiResult.result.text }), {
        headers: { 'Content-Type': 'application/json' }
      })
    }
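The Worker reads `result.text` from the upstream JSON and returns it as `{ text }`. The sketch below isolates that mapping as a pure function so it can be checked without a network call. It assumes the upstream body uses the usual Cloudflare API envelope with `success`, `errors`, and `result` fields; the function name and the 502 status are choices made for this example.

```javascript
// Maps a Workers AI response body to an OpenAI-style transcription result (hypothetical helper).
// Assumption: the body is an envelope like { success, errors: [{ message }], result: { text } }.
function toTranscription(apiResult) {
  if (!apiResult || apiResult.success === false || !apiResult.result) {
    const errors = (apiResult && apiResult.errors) || [];
    const message = errors.map(e => e.message).join('; ') || 'Transcription failed';
    return { status: 502, body: { error: message } };
  }
  return { status: 200, body: { text: apiResult.result.text } };
}

// Invented sample payloads.
console.log(toTranscription({ success: true, result: { text: 'hello world' } }));
console.log(toTranscription({ success: false, errors: [{ message: 'bad audio' }], result: null }));
```

Inside the Worker, the returned `status` and `body` could be passed straight to `new Response(JSON.stringify(body), { status, headers })`.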

Translation models

model:

- @cf/meta/m2m100-1.2b

Supported languages

source_lang and target_lang accept the same set of languages:

- English (en)

- Chinese (zh)

- French (fr)

- German (de)

- Spanish (es)

- Italian (it)

- Japanese (ja)

- Korean (ko)

- Portuguese (pt)

- Russian (ru)

- Dutch (nl)

- Swedish (sv)

- Norwegian (no)

- Danish (da)

- Finnish (fi)

- Polish (pl)

- Turkish (tr)

- Arabic (ar)

- Hebrew (he)

- Indonesian (id)

- Thai (th)

- Vietnamese (vi)

- Hindi (hi)

- Malay (ms)

- Greek (el)

- Czech (cs)

- Slovak (sk)

- Romanian (ro)

- Hungarian (hu)

- Bulgarian (bg)

- Croatian (hr)

- Serbian (sr)

- Ukrainian (uk)

POST example:

    curl --request POST \
      --url "https://api.cloudflare.com/client/v4/accounts/${AccountID}/ai/run/@cf/meta/m2m100-1.2b" \
      --header 'Authorization: Bearer YOUR_TOKEN' \
      --header 'Content-Type: application/json' \
      --data '{
        "source_lang": "en",
        "target_lang": "zh",
        "text": "I love you."
      }'
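A JavaScript version of this call can validate the language codes before sending anything. The sketch below is illustrative only: the helper name is hypothetical, and the code set simply mirrors the language list given in this section.

```javascript
// Language codes listed in this section.
const SUPPORTED_LANGS = new Set([
  'en', 'zh', 'fr', 'de', 'es', 'it', 'ja', 'ko', 'pt', 'ru', 'nl',
  'sv', 'no', 'da', 'fi', 'pl', 'tr', 'ar', 'he', 'id', 'th', 'vi',
  'hi', 'ms', 'el', 'cs', 'sk', 'ro', 'hu', 'bg', 'hr', 'sr', 'uk'
]);

// Builds the URL and fetch options for a translation request (hypothetical helper).
function buildTranslationRequest(accountId, token, text, sourceLang, targetLang) {
  const source = sourceLang.toLowerCase();
  const target = targetLang.toLowerCase();
  for (const lang of [source, target]) {
    if (!SUPPORTED_LANGS.has(lang)) throw new RangeError(`Unsupported language: ${lang}`);
  }
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/@cf/meta/m2m100-1.2b`,
    init: {
      method: 'POST',
      headers: {
        'Authorization': `Bearer ${token}`,
        'Content-Type': 'application/json'
      },
      body: JSON.stringify({ source_lang: source, target_lang: target, text })
    }
  };
}

// Usage (needs a real account ID and token):
// const { url, init } = buildTranslationRequest('YOUR_ACCOUNT_ID', 'YOUR_TOKEN', 'I love you.', 'en', 'zh');
// fetch(url, init).then(r => r.json()).then(j => console.log(j.result.translated_text));
```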

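Immersive Translate

In Immersive Translate, enable Beta features in the developer settings, then select DeepLX (Beta) and enter this address: https://your-worker-address/translate?password=${authKey}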

worker.js

    // DeepLX-compatible translation endpoint backed by Workers AI.
    addEventListener('fetch', event => event.respondWith(handleRequest(event.request)));

    const model = '@cf/meta/m2m100-1.2b';
    const authKey = 'YOUR_PASSWORD';     // expected in the ?password= query parameter
    const accountId = 'YOUR_ACCOUNT_ID';
    const token = 'YOUR_TOKEN';

    async function handleRequest(request) {
      const url = new URL(request.url);

      // Answer CORS preflight requests.
      if (request.method === 'OPTIONS') {
        return new Response(null, {
          status: 204,
          headers: {
            'Access-Control-Allow-Origin': '*',
            'Access-Control-Allow-Methods': 'POST, OPTIONS',
            'Access-Control-Allow-Headers': 'Content-Type, Authorization'
          }
        });
      }

      // Only POST /translate with the correct password is accepted.
      if (request.method !== 'POST' || url.pathname !== '/translate') {
        return new Response('Not Found', { status: 404 });
      }
      if (url.searchParams.get('password') !== authKey) {
        return new Response('Unauthorized', { status: 401 });
      }

      let data;
      try {
        data = await request.json();
      } catch (error) {
        return new Response('Bad Request', { status: 400 });
      }
      if (!data.text || !data.source_lang || !data.target_lang) {
        return new Response('Bad Request', { status: 400 });
      }

      const cloudflareUrl = `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${model}`;
      const init = {
        method: 'POST',
        headers: { 'Authorization': `Bearer ${token}`, 'Content-Type': 'application/json' },
        // The model expects lowercase language codes.
        body: JSON.stringify({
          source_lang: data.source_lang.toLowerCase(),
          target_lang: data.target_lang.toLowerCase(),
          text: data.text
        })
      };

      try {
        const response = await fetch(cloudflareUrl, init);
        const responseData = await response.json();
        if (!response.ok || !responseData.result) {
          throw new Error('Workers AI returned status ' + response.status);
        }
        // Reply in the shape a DeepLX client expects.
        return new Response(JSON.stringify({
          alternatives: [],
          code: 200,
          data: responseData.result.translated_text,
          id: Math.floor(Math.random() * 10000000000),
          source_lang: data.source_lang,
          target_lang: data.target_lang
        }), { headers: { 'Content-Type': 'application/json' } });
      } catch (error) {
        console.error('Translation failed:', error);
        return new Response(JSON.stringify({ error: 'Translation failed' }), {
          headers: { 'Content-Type': 'application/json' },
          status: 500
        });
      }
    }
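To test the Worker without the browser extension, you can call it directly. The sketch below builds the request the Worker expects: a POST to /translate with the password in the query string and `text`, `source_lang`, and `target_lang` in the JSON body. The helper names and the example origin are hypothetical.

```javascript
// Builds the URL and fetch options for the translate Worker (hypothetical helper).
function buildTranslateRequest(workerOrigin, password, text, sourceLang, targetLang) {
  const url = new URL('/translate', workerOrigin);
  // searchParams takes care of URL-encoding the password.
  url.searchParams.set('password', password);
  return {
    url: url.toString(),
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ text, source_lang: sourceLang, target_lang: targetLang })
    }
  };
}

// Sends the request and returns the translated text from the "data" field.
async function translate(workerOrigin, password, text, sourceLang, targetLang) {
  const { url, init } = buildTranslateRequest(workerOrigin, password, text, sourceLang, targetLang);
  const response = await fetch(url, init);
  if (!response.ok) throw new Error(`Worker returned status ${response.status}`);
  const json = await response.json();
  return json.data;
}

// Usage (translate.example.com stands in for your own Worker address):
// translate('https://translate.example.com', 'YOUR_PASSWORD', 'I love you.', 'EN', 'ZH').then(console.log);
```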