Today, DeepSeek-Coder-V2, the world's first model to rival GPT-4-Turbo in coding and math ability, officially launched and was open-sourced.

Can it be used on Silicon?

Pretty strong. I tried it briefly and its reasoning really is good.

How much does it cost?

That impressive!

Ollama will probably add it in a few days; there's no coder version there yet.

Even before the second generation came out, Shihuang was already recommending deepseek-coder for code completion.

Damn, this is so good.

This time it can even replace chat, rather than only doing completion.

This coder is 236B too.

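Maybe try it on the free Intel instance from the level-3 section? It doesn't support CUDA though, so I'm not sure it can be deployed. Might as well just pay for the official one.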

The API version's context is still 32k; looking forward to a 128k version.

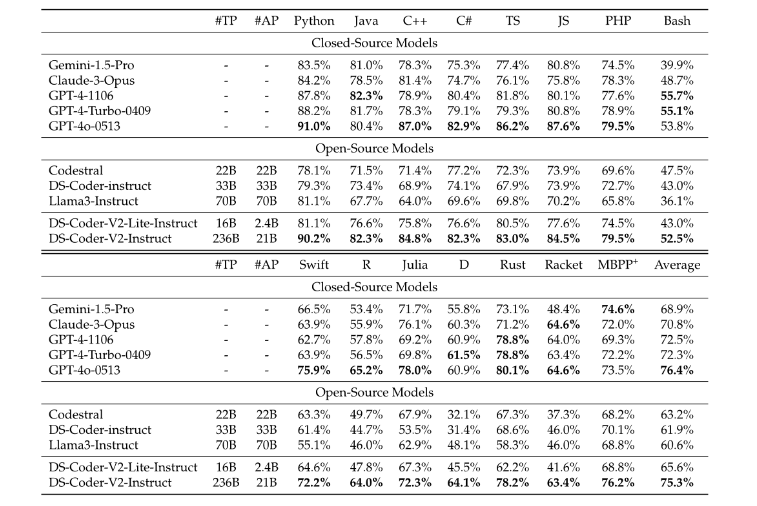

This performance is notable as it breaks the dominance typically seen from closed-source models, standing out as a leading open-source contender. It is surpassed only by GPT-4o, which leads with an average score of 76.4%. DeepSeek-Coder-V2-Instruct shows top-tier results across a variety of languages, including the highest scores in Java and PHP, and strong performances in Python, C++, C#, TypeScript, and JavaScript, underscoring its robustness and versatility in handling diverse coding challenges.

Hoping deepseek-math gets an update too!

This coder-v2 uses the same training method as deepseek-math; you could call it an integrated version of math.

To collect code-related and math-related web texts from Common Crawl, we follow the same pipeline as DeepSeekMath (Shao et al., 2024).

Thanks!!!

The math ability is quite good, even though only 10% of the corpus is math.

The pre-training data for DeepSeek-Coder-V2 primarily consists of 60% source code, 10% math corpus, and 30% natural language corpus.
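
To make that mix concrete, here is a rough sketch of how the quoted percentages would split a token budget. The total used below is a made-up placeholder for illustration, not a figure from the paper, and the function name is my own.

```python
# Pre-training mix quoted above: 60% source code, 10% math, 30% natural language.
MIX_PERCENT = {"source_code": 60, "math": 10, "natural_language": 30}


def tokens_per_source(total_tokens: int) -> dict[str, int]:
    """Split a token budget according to MIX_PERCENT using integer arithmetic."""
    assert sum(MIX_PERCENT.values()) == 100
    return {name: total_tokens * pct // 100 for name, pct in MIX_PERCENT.items()}


# HYPOTHETICAL budget, chosen only so the numbers are easy to read.
HYPOTHETICAL_TOTAL = 1_000_000_000
split = tokens_per_source(HYPOTHETICAL_TOTAL)
for name, count in split.items():
    print(f"{name}: {count:,} tokens")
```

With any budget, the math share is one sixth of the code share, which is what makes the math results notable.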

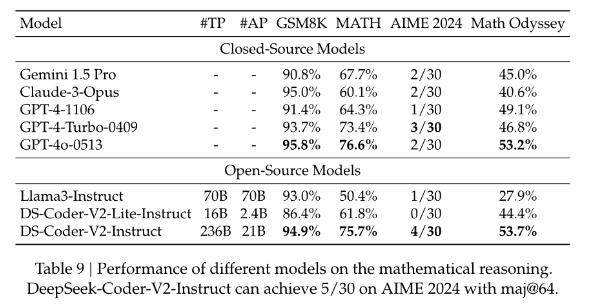

The results, presented in Table 9, were obtained using greedy decoding without the aid of tools or voting techniques, unless otherwise specified. DeepSeek-Coder-V2 achieved an accuracy of 75.7% on the MATH benchmark and 53.7% on Math Odyssey, comparable to the state-of-the-art GPT-4o. Additionally, DeepSeek-Coder-V2 solves more problems from AIME2024 than the other models, demonstrating its strong mathematical reasoning capabilities.
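
For anyone unsure what "greedy decoding without voting" means in that quote, here is a minimal toy sketch. It is not the paper's evaluation code: `toy_scores` is a hypothetical stand-in for a model, and the tokens and scores are invented. Greedy decoding takes the single highest-scoring token at each step, while voting would sample several answers and keep the most common one.

```python
from collections import Counter


def greedy_decode(next_token_scores, max_steps=20, eos="<eos>"):
    """Pick the highest-scoring token at every step (no sampling)."""
    tokens = []
    for _ in range(max_steps):
        scores = next_token_scores(tokens)  # dict: token -> score
        best = max(scores, key=scores.get)
        if best == eos:
            break
        tokens.append(best)
    return tokens


def majority_vote(answers):
    """Voting: return the most frequent answer among several samples."""
    return Counter(answers).most_common(1)[0][0]


def toy_scores(prefix):
    # HYPOTHETICAL model: fixed scores that depend only on the position.
    table = [
        {"4": 0.7, "5": 0.3},
        {"2": 0.6, "<eos>": 0.4},
        {"<eos>": 0.9, "0": 0.1},
    ]
    return table[min(len(prefix), len(table) - 1)]


greedy_answer = "".join(greedy_decode(toy_scores))
voted_answer = majority_vote(["42", "41", "42"])
print(greedy_answer, voted_answer)
```

The quoted numbers come from the single greedy pass, so they reflect one answer per problem rather than the best of many attempts.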

We'll see whether DeepSeek updates the math model with the V2 architecture.

Great, bring on the gaokao questions!

Maybe it was trained on gaokao questions, hahaha.

Support open source!