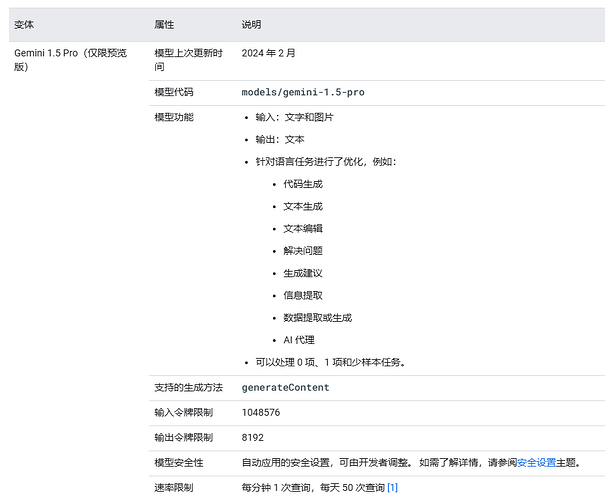

I was just browsing the Google AI Studio website and noticed that information about the gemini-1.5-pro model has already appeared under Models.

The highlight is in the rate-limit column: once the model opens up, it is expected to allow 50 free calls per day.

Model features:

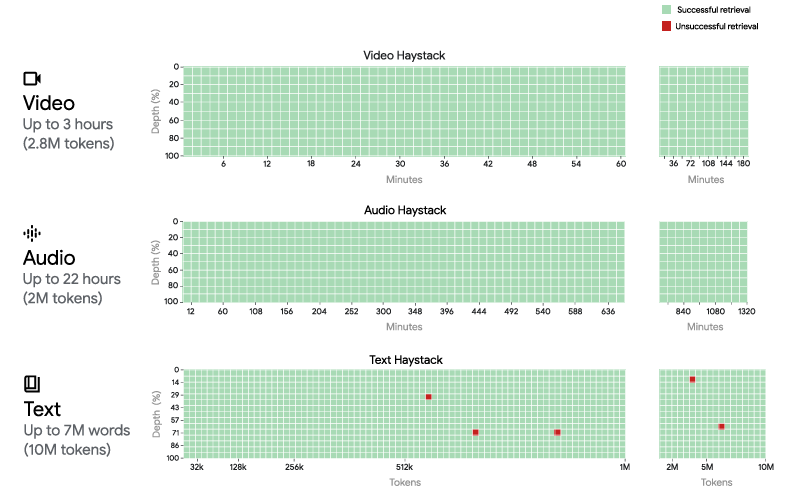

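An ultra-long context window (10M, which is 5 times longer than that of the recently popular Kimi!) and excellent multimodal capabilities (text, images, video).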

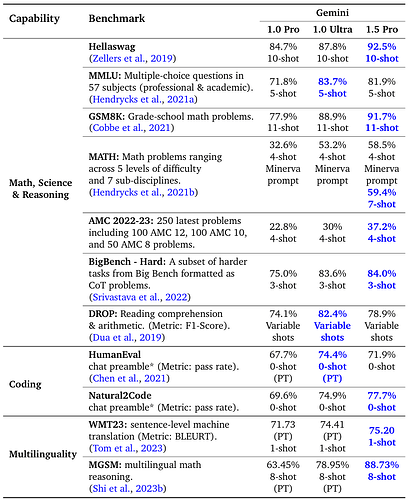

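Model performance:

It trades wins with the largest tier (Ultra) on knowledge Q&A, reasoning, coding, and multilingual tasks, which puts its text capability close to GPT-4 level.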

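Model availability:

Using an API key from an account that had previously been admitted to the gemini-1.5-pro early trial, I queried the v1beta endpoint for the model list. As shown below, gemini-1.5-pro is nowhere to be found: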

    root@iZt4n9bibw8mk6t2jkkm7hZ:~# curl https://generativelanguage.googleapis.com/v1beta/models?key=XXXX
    {
      "models": [
        {
          "name": "models/chat-bison-001",
          "version": "001",
          "displayName": "PaLM 2 Chat (Legacy)",
          "description": "A legacy text-only model optimized for chat conversations",
          "inputTokenLimit": 4096,
          "outputTokenLimit": 1024,
          "supportedGenerationMethods": [
            "generateMessage",
            "countMessageTokens"
          ],
          "temperature": 0.25,
          "topP": 0.95,
          "topK": 40
        },
        {
          "name": "models/text-bison-001",
          "version": "001",
          "displayName": "PaLM 2 (Legacy)",
          "description": "A legacy model that understands text and generates text as an output",
          "inputTokenLimit": 8196,
          "outputTokenLimit": 1024,
          "supportedGenerationMethods": [
            "generateText",
            "countTextTokens",
            "createTunedTextModel"
          ],
          "temperature": 0.7,
          "topP": 0.95,
          "topK": 40
        },
        {
          "name": "models/embedding-gecko-001",
          "version": "001",
          "displayName": "Embedding Gecko",
          "description": "Obtain a distributed representation of a text.",
          "inputTokenLimit": 1024,
          "outputTokenLimit": 1,
          "supportedGenerationMethods": [
            "embedText",
            "countTextTokens"
          ]
        },
        {
          "name": "models/gemini-1.0-pro",
          "version": "001",
          "displayName": "Gemini 1.0 Pro",
          "description": "The best model for scaling across a wide range of tasks",
          "inputTokenLimit": 30720,
          "outputTokenLimit": 2048,
          "supportedGenerationMethods": [
            "generateContent",
            "countTokens"
          ],
          "temperature": 0.9,
          "topP": 1,
          "topK": 1
        },
        {
          "name": "models/gemini-1.0-pro-001",
          "version": "001",
          "displayName": "Gemini 1.0 Pro 001 (Tuning)",
          "description": "The best model for scaling across a wide range of tasks. This is a stable model that supports tuning.",
          "inputTokenLimit": 30720,
          "outputTokenLimit": 2048,
          "supportedGenerationMethods": [
            "generateContent",
            "countTokens",
            "createTunedModel"
          ],
          "temperature": 0.9,
          "topP": 1,
          "topK": 1
        },
        {
          "name": "models/gemini-1.0-pro-latest",
          "version": "001",
          "displayName": "Gemini 1.0 Pro Latest",
          "description": "The best model for scaling across a wide range of tasks. This is the latest model.",
          "inputTokenLimit": 30720,
          "outputTokenLimit": 2048,
          "supportedGenerationMethods": [
            "generateContent",
            "countTokens"
          ],
          "temperature": 0.9,
          "topP": 1,
          "topK": 1
        },
        {
          "name": "models/gemini-1.0-pro-vision-latest",
          "version": "001",
          "displayName": "Gemini 1.0 Pro Vision",
          "description": "The best image understanding model to handle a broad range of applications",
          "inputTokenLimit": 12288,
          "outputTokenLimit": 4096,
          "supportedGenerationMethods": [
            "generateContent",
            "countTokens"
          ],
          "temperature": 0.4,
          "topP": 1,
          "topK": 32
        },
        {
          "name": "models/gemini-pro",
          "version": "001",
          "displayName": "Gemini 1.0 Pro",
          "description": "The best model for scaling across a wide range of tasks",
          "inputTokenLimit": 30720,
          "outputTokenLimit": 2048,
          "supportedGenerationMethods": [
            "generateContent",
            "countTokens"
          ],
          "temperature": 0.9,
          "topP": 1,
          "topK": 1
        },
        {
          "name": "models/gemini-pro-vision",
          "version": "001",
          "displayName": "Gemini 1.0 Pro Vision",
          "description": "The best image understanding model to handle a broad range of applications",
          "inputTokenLimit": 12288,
          "outputTokenLimit": 4096,
          "supportedGenerationMethods": [
            "generateContent",
            "countTokens"
          ],
          "temperature": 0.4,
          "topP": 1,
          "topK": 32
        },
        {
          "name": "models/embedding-001",
          "version": "001",
          "displayName": "Embedding 001",
          "description": "Obtain a distributed representation of a text.",
          "inputTokenLimit": 2048,
          "outputTokenLimit": 1,
          "supportedGenerationMethods": [
            "embedContent"
          ]
        },
        {
          "name": "models/aqa",
          "version": "001",
          "displayName": "Model that performs Attributed Question Answering.",
          "description": "Model trained to return answers to questions that are grounded in provided sources, along with estimating answerable probability.",
          "inputTokenLimit": 7168,
          "outputTokenLimit": 1024,
          "supportedGenerationMethods": [
            "generateAnswer"
          ],
          "temperature": 0.2,
          "topP": 1,
          "topK": 40
        }
      ]
    }
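
Scanning that output by eye gets tedious if you want to re-check every day, so here is a minimal Python sketch of the same check. It assumes the response keeps the shape shown above (a top-level `models` list whose entries carry a `name` like `models/...`). The helper names `fetch_models`, `model_names`, and `has_model` are made up for this post and are not part of any Google SDK. It also ignores pagination, so treat it as a rough check rather than a complete client.

```python
import json
import urllib.parse
import urllib.request

# Endpoint copied from the curl command above.
MODELS_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def fetch_models(api_key: str) -> dict:
    """Fetch the model list over the network (same request as the curl call)."""
    url = MODELS_URL + "?" + urllib.parse.urlencode({"key": api_key})
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def model_names(response: dict) -> list[str]:
    """Return short model names with the 'models/' prefix stripped."""
    return [m["name"].removeprefix("models/") for m in response.get("models", [])]


def has_model(response: dict, wanted: str) -> bool:
    """True if `wanted` (e.g. 'gemini-1.5-pro') is listed in the response."""
    return wanted in model_names(response)


# Offline demo on a trimmed copy of the response above (no network needed).
sample = {
    "models": [
        {"name": "models/gemini-1.0-pro", "inputTokenLimit": 30720},
        {"name": "models/gemini-pro-vision", "inputTokenLimit": 12288},
    ]
}
print(has_model(sample, "gemini-1.5-pro"))  # False
print(has_model(sample, "gemini-1.0-pro"))  # True
```

To run it against the live API, replace `sample` with `fetch_models("XXXX")` using your own key. It needs Python 3.9 or later because of `str.removeprefix`.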

Let the bullets fly a little longer!