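Reference:

P.S. The official uv docs are really thoughtful. They practically spoon-feed you, with base configurations written out for CPU, cu11, cu12, Intel GPU, and more.

As I recall, some Poetry users consider installing by pointing at a specific .whl path, but that approach is very limited, because a wheel is locked to one Python version and one platform.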
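To make the "locked to one Python version and one platform" point concrete, here is a minimal sketch that splits a wheel filename into its tags. The filename is an illustrative example following the standard wheel naming scheme, not one taken from the posts referenced here, and `parse_wheel_filename` is a hypothetical helper.

```python
def parse_wheel_filename(filename: str) -> dict:
    """Split a wheel filename into name, version, and compatibility tags."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    # Layout: name-version[-build]-python-abi-platform (5 fields, or 6 with a build tag).
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected wheel filename layout: {filename}")
    python_tag, abi_tag, platform_tag = parts[-3:]
    return {
        "name": parts[0],
        "version": parts[1],
        "python": python_tag,
        "abi": abi_tag,
        "platform": platform_tag,
    }


# Illustrative filename (an assumption for the example).
info = parse_wheel_filename("torch-2.1.0+cpu-cp310-cp310-linux_x86_64.whl")
print(info["python"], info["platform"])
```

A wheel tagged like this one only installs on CPython 3.10 on x86_64 Linux, which is why hard-coding a .whl path does not travel well between machines.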

Installing a specific PyTorch build (f/e CPU-only) with Poetry

Problem:

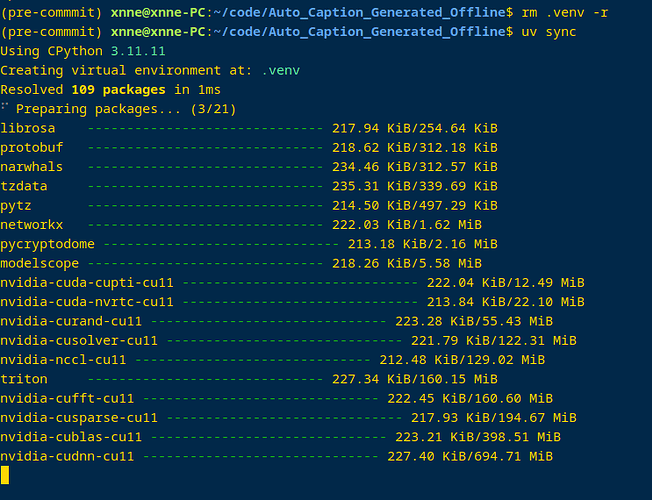

Normally, when we run uv add torch==2.1.0, what gets installed is the torch build that ships CUDA support alongside the CPU backend:

xnne@xnne-PC:~/code/Auto_Caption_Generated_Offline$ uv add torch==2.1.0 torchaudio==2.1.0

⠴ nvidia-cusparse-cu12==12.1.0.106 ^C

To start, consider the following (default) configuration, which would be generated by running uv init --python 3.12 followed by uv add torch torchvision.

In this case, PyTorch would be installed from PyPI, which hosts CPU-only wheels for Windows and macOS, and GPU-accelerated wheels on Linux (targeting CUDA 12.4):

[project]

name = "project"

version = "0.1.0"

requires-python = ">=3.12"

dependencies = [

"torch>=2.6.0",

"torchvision>=0.21.0",

]

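This is not always what we want. For example, my deepin machine has no NVIDIA driver installed, so the 3 to 4 GB of CUDA packages I would download are of no use to me.

I tried uv add torch==2.1.0+cpu -f https://download.pytorch.org/whl/torch_stable.html: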

xnne@xnne-PC:~/code/Auto_Caption_Generated_Offline$ uv add torch==2.1.0+cpu -f https://download.pytorch.org/whl/torch_stable.html

Resolved 63 packages in 10.37s

Built auto-caption-generate-offline @ fil

⠹ Preparing packages... (4/5)

torch ------------------------------ 19.06 MiB/176.29 MiB

It did download the CPU build of torch. However, when I tried to install the package from my remote repository, I ran into a problem:

xnne@xnne-PC:~/code/test/Auto_Caption_Generated_Offline$ uv pip install git+https://github.com/MrXnneHang/[email protected]

Updated https://github.com/MrXnneHang/Auto_Caption_Generated_Offline (12065e01ec1dc11f8f224fbb132cfd1c18ec3ac1)

× No solution found when resolving dependencies:

╰─▶ Because there is no version of torch==2.1.0+cpu and auto-caption-generate-offline==2.4.0 depends on torch==2.1.0+cpu, we can conclude that auto-caption-generate-offline==2.4.0 cannot be used.

And because only auto-caption-generate-offline==2.4.0 is available and you require auto-caption-generate-offline, we can conclude that your requirements are unsatisfiable.

Cause:

The cause is that the builds PyTorch publishes, such as +cpu, are not on the PyPI index.

From a packaging perspective, PyTorch has a few uncommon characteristics:

Many PyTorch wheels are hosted on a dedicated index, rather than the Python Package Index (PyPI). As such, installing PyTorch often requires configuring a project to use the PyTorch index.

PyTorch produces distinct builds for each accelerator (e.g., CPU-only, CUDA). Since there's no standardized mechanism for specifying these accelerators when publishing or installing, PyTorch encodes them in the local version specifier. As such, PyTorch versions will often look like 2.5.1+cpu, 2.5.1+cu121, etc.

Builds for different accelerators are published to different indexes. For example, the +cpu builds are published on https://download.pytorch.org/whl/cpu, while the +cu121 builds are published on https://download.pytorch.org/whl/cu121.
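The relationship between the local version suffix and the index can be sketched in a few lines. This is only an illustration: the two URLs quoted above are the only ones confirmed by the text, and the assumption that every other suffix follows the same `<base>/<suffix>` pattern is mine. `split_local_version` and `index_url_for` are hypothetical helper names.

```python
from typing import Optional, Tuple

PYTORCH_INDEX_BASE = "https://download.pytorch.org/whl"


def split_local_version(version: str) -> Tuple[str, Optional[str]]:
    """Split '2.5.1+cpu' into ('2.5.1', 'cpu'); the suffix is None if absent."""
    public, sep, local = version.partition("+")
    return public, (local if sep else None)


def index_url_for(version: str) -> Optional[str]:
    """Guess the PyTorch index for a version, based on its local suffix.

    Assumption: the index path equals the suffix, as in the +cpu and +cu121
    examples. A version without a suffix yields None, meaning no dedicated
    index can be inferred from the version string alone.
    """
    _, local = split_local_version(version)
    if local is None:
        return None
    return f"{PYTORCH_INDEX_BASE}/{local}"


print(index_url_for("2.5.1+cpu"))
print(index_url_for("2.5.1+cu121"))
```

Seen this way, the resolver error earlier makes sense: a requirement on torch==2.1.0+cpu can only be satisfied if the resolver is told about the index that actually hosts +cpu builds.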

Solution:

The configuration I finally settled on looks like this:

dependencies = [

"funasr==1.2.4",

"pyaml==25.1.0",

"torch==2.1.0",

"torchaudio==2.1.0",

]

[[tool.uv.index]]

name = "pytorch-cpu"

url = "https://download.pytorch.org/whl/cpu"

explicit = true

[tool.uv.sources]

torch = [

{ index = "pytorch-cpu" },

]

torchaudio = [

{ index = "pytorch-cpu" },

]

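After that, run uv lock and push the result.

P.S. When configuring a CUDA build, consider targeting an older CUDA version such as 11.8 rather than the latest 12.x or even 13.x. Users' drivers are not always up to date, and newer drivers remain compatible with older CUDA versions. The exception is when a newer version brings a very large performance gain, but in general the difference is small.

Installing from GitHub:

The installation finally succeeded =-=.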

xnne@xnne-PC:~/code/test/Auto_Caption_Generated_Offline$ uv venv -p 3.10 --seed

Using CPython 3.10.16

Creating virtual environment with seed packages at: .venv

+ pip==25.0.1

+ setuptools==75.8.2

+ wheel==0.45.1

xnne@xnne-PC:~/code/test/Auto_Caption_Generated_Offline$ uv pip install git+https://github.com/MrXnneHang/[email protected]

Resolved 63 packages in 5.85s

Prepared 2 packages in 11m 45s

...

+ torch==2.1.0+cpu

+ torch-complex==0.4.4

+ torchaudio==2.1.0+cpu

+ tqdm==4.67.1

...

...

At runtime, however, there was a small conflict between the NumPy that torch expects and the NumPy that funasr pulls in:

xnne@xnne-PC:~/code/test/Auto_Caption_Generated_Offline$ uv run test-ACGO

Built auto-caption-generate-offline @ file:///home/xnne/code/test/Auto_Caption_Generated_Offline

Uninstalled 1 package in 0.57ms

Installed 1 package in 0.95ms

A module that was compiled using NumPy 1.x cannot be run in

NumPy 2.1.3 as it may crash. To support both 1.x and 2.x

versions of NumPy, modules must be compiled with NumPy 2.0.

Some module may need to rebuild instead e.g. with 'pybind11>=2.12'.

If you are a user of the module, the easiest solution will be to

downgrade to 'numpy<2' or try to upgrade the affected module.

We expect that some modules will need time to support NumPy 2.

Traceback (most recent call last):

File "/home/xnne/code/test/Auto_Caption_Generated_Offline/.venv/bin/test-ACGO", line 4, in <module>

from uiya.test import main

File "/home/xnne/code/test/Auto_Caption_Generated_Offline/src/uiya/test.py", line 1, in <module>

import funasr

Manually downgrading numpy to 1.26.4 resolved it.

uv add numpy==1.26.4

uv lock
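The warning above comes down to a major-version check: a module compiled against NumPy 1.x needs a NumPy below 2. A minimal sketch of that check follows. The helper names are hypothetical, and the parsing only looks at the leading integer of the version string.

```python
def numpy_major(version: str) -> int:
    """Return the major component of a version string such as '1.26.4'."""
    return int(version.split(".")[0])


def works_with_numpy1_builds(version: str) -> bool:
    """True if modules compiled against NumPy 1.x can load under this NumPy."""
    return numpy_major(version) < 2


for candidate in ("1.26.4", "2.1.3"):
    status = "ok" if works_with_numpy1_builds(candidate) else "needs numpy<2"
    print(f"numpy {candidate}: {status}")
```

Pinning numpy==1.26.4 as above is the strictest way to satisfy this check. A looser numpy<2 constraint would express the same requirement, though only the exact pin was tried here.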

xnne@xnne-PC:~/code/test$ uv venv -p 3.10 --seed

Using CPython 3.10.16

Creating virtual environment with seed packages at: .venv

+ pip==25.0.1

+ setuptools==75.8.2

+ wheel==0.45.1

Activate with: source .venv/bin/activate

xnne@xnne-PC:~/code/test$ uv pip install git+https://github.com/MrXnneHang/[email protected]

Resolved 63 packages in 7.90s

Prepared 2 packages in 603ms

Installed 63 packages in 259ms

+ aliyun-python-sdk-core==2.16.0

+ aliyun-python-sdk-kms==2.16.5

+ antlr4-python3-runtime==4.9.3

+ audioread==3.0.1

+ auto-caption-generate-offline==2.4.0 (from git+https://github.com/MrXnneHang/Auto_Caption_Generated_Offline@5f03a04ebdbe4b7a1329302b551e58092e8af9ee)

...

+ torch==2.1.0+cpu

+ torch-complex==0.4.4

+ torchaudio==2.1.0+cpu

+ tqdm==4.67.1

...

xnne@xnne-PC:~/code/test$ uv run test-ACGO

funasr:1.2.4

torch:2.1.0+cpu

torchaudio:2.1.0+cpu
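For readers who want a similar check in their own project, here is a rough sketch of a version report in the same name:version style. It is not the actual test-ACGO script, whose source is not shown here. It reads installed package metadata through the standard library instead of importing the packages, and `report_versions` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version


def report_versions(packages):
    """Return one 'name:version' line per package, noting missing ones."""
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}:{version(name)}")
        except PackageNotFoundError:
            lines.append(f"{name}:not installed")
    return lines


for line in report_versions(["funasr", "torch", "torchaudio"]):
    print(line)
```

Because the local suffix is part of the installed version, a report like this is a quick way to confirm that the +cpu build, and not the default one, ended up in the environment.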

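A mix-up

There was also a small mix-up along the way. I was running uv pip install and then uv run inside my project directory, which contains a pyproject.toml and a uv.lock. In that situation uv run does not run the package I had just installed; it runs the version built from the pyproject.toml in my project. So I was stuck with stale code, and uv run kept reporting the original bug. A git pull fixed it.

So if you have already run git pull, there is no need for uv pip install git+. Just use uv run directly.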
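One way to avoid this kind of confusion is to check whether the current directory belongs to a project before calling uv run. The sketch below walks up from a starting directory looking for pyproject.toml. It is a simplified stand-in for project discovery, not uv's actual implementation, and `find_project_root` is a hypothetical helper.

```python
from pathlib import Path
from typing import Optional


def find_project_root(start: Path) -> Optional[Path]:
    """Return the nearest directory at or above `start` holding pyproject.toml."""
    start = start.resolve()
    for directory in (start, *start.parents):
        if (directory / "pyproject.toml").is_file():
            return directory
    return None


root = find_project_root(Path.cwd())
if root is None:
    print("no project found; commands act on the active environment")
else:
    print(f"project found at {root}; uv run will use this project's code")
```

If a project root is found, the code that runs is whatever is checked out there, so updating it means git pull rather than reinstalling from the remote.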

If I run into problems with the CUDA build later, I may add more here.