If you didn't know the aipro API can be used for free, see here.

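The original API returns a non-streaming response body, so simply repackaging it as a simulated stream is enough for the various front-end projects to parse the JSON.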
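For context, this is roughly what a streaming front end does with the simulated stream: it reads each `data:` event, stops at `data: [DONE]`, and concatenates every `delta.content`. A simplified sketch; `collectStreamedText` is a hypothetical name, and it assumes one `data:` line per event:

```javascript
// Hypothetical helper: rebuild the assistant text from an SSE body made of
// OpenAI-style chat.completion.chunk events (one "data:" line per event assumed)
function collectStreamedText(sseBody) {
  let text = '';
  // Events are separated by a blank line
  for (const event of sseBody.split('\n\n')) {
    const line = event.trim();
    if (!line.startsWith('data: ')) continue; // skip empty or non-data events
    const payload = line.slice('data: '.length);
    if (payload === '[DONE]') break; // end-of-stream marker
    const delta = JSON.parse(payload).choices[0].delta;
    if (delta && typeof delta.content === 'string') text += delta.content;
  }
  return text;
}

const body =
  'data: {"choices":[{"index":0,"delta":{"role":"assistant","content":"Hello"}}]}\n\n' +
  'data: {"choices":[{"index":0,"delta":{"content":" world"}}]}\n\n' +
  'data: [DONE]\n\n';
console.log(collectStreamedText(body)); // Hello world
```

Because the client only cares about this event format, a single event holding the whole reply parses just as well as many small ones.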

I won't ramble; the code is pasted right below. Just deploy it as a Cloudflare Worker and enjoy.

Note: this code is adapted from code shared by vv. Thanks, vv!

@vux1jpmal5t41lg

```javascript
addEventListener("fetch", event => {
  event.respondWith(handleRequest(event.request));
});

// CORS headers added to every response so browser-based clients (e.g. NextChat) can call the Worker
const CORS_HEADERS = {
  'Access-Control-Allow-Origin': '*',
  'Access-Control-Allow-Headers': '*'
};

async function handleRequest(request) {
  // Answer CORS preflight requests
  if (request.method === "OPTIONS") {
    return new Response(null, { headers: CORS_HEADERS, status: 204 });
  }

  // Only handle POST requests to the OpenAI-compatible chat completions path
  if (request.method === "POST" && new URL(request.url).pathname === "/v1/chat/completions") {
    const url = 'https://multillm.ai-pro.org/api/openai-completion'; // Target API endpoint

    const headers = new Headers(request.headers);
    // Add or override the headers the upstream needs
    headers.set('Content-Type', 'application/json');
    // The body is re-serialized below, so the original length may no longer match
    headers.delete('Content-Length');

    // Read the request body as JSON
    const requestBody = await request.json();
    const stream = requestBody.stream; // Whether the client asked for a streamed reply

    // Build the upstream request
    const newRequest = new Request(url, {
      method: 'POST',
      headers: headers,
      body: JSON.stringify(requestBody)
    });

    try {
      // Forward the request; the upstream replies with one complete (non-streamed) JSON body
      const response = await fetch(newRequest);
      const responseData = await response.json();

      if (stream) {
        // Client wants SSE: repackage the complete reply in event-stream format
        return new Response(eventStream(responseData), {
          headers: {
            ...CORS_HEADERS,
            'Content-Type': 'text/event-stream; charset=UTF-8',
            'Cache-Control': 'no-cache',
            'Connection': 'keep-alive'
          }
        });
      }

      // Non-streaming: return the JSON with fresh headers, because the upstream's
      // Content-Length/Content-Encoding do not describe the re-serialized body
      return new Response(JSON.stringify(responseData), {
        status: response.status,
        headers: { ...CORS_HEADERS, 'Content-Type': 'application/json' }
      });
    } catch (e) {
      // Network failure or a non-JSON reply from the upstream
      return new Response(JSON.stringify({ error: 'Unable to reach the backend API' }), {
        status: 502,
        headers: { ...CORS_HEADERS, 'Content-Type': 'application/json' }
      });
    }
  }

  // Wrong method or path
  return new Response('Not found', { status: 404, headers: CORS_HEADERS });
}

// Sends the whole reply as a single chunk (no splitting), then the
// "data: [DONE]" terminator that OpenAI-style streaming clients expect
function eventStream(data) {
  const chunk = {
    id: data.id,
    object: 'chat.completion.chunk',
    created: data.created,
    model: data.model,
    system_fingerprint: data.system_fingerprint,
    choices: [{
      index: 0,
      delta: { role: 'assistant', content: data.choices[0].message.content },
      logprobs: null,
      finish_reason: data.choices[0].finish_reason
    }]
  };
  return `data: ${JSON.stringify(chunk)}\n\ndata: [DONE]\n\n`;
}
```

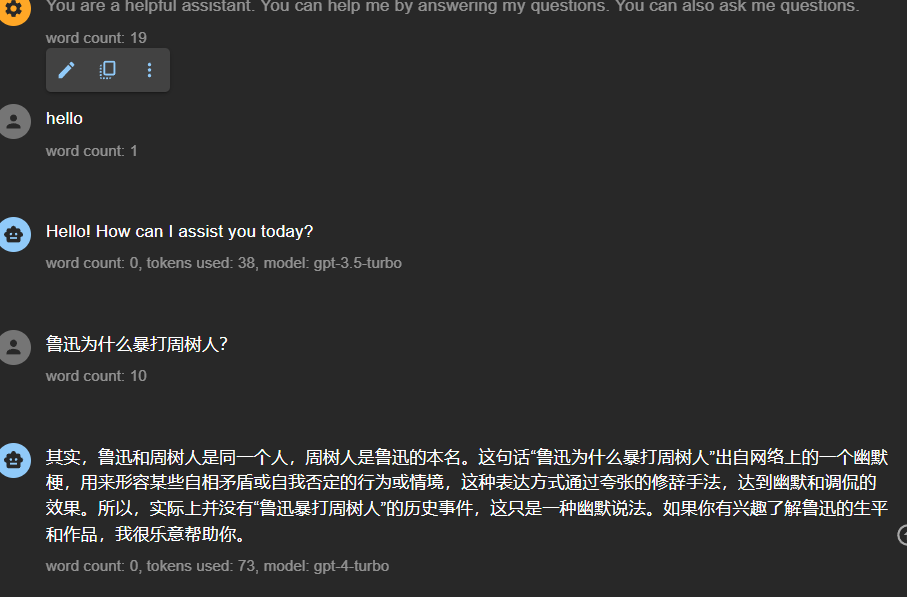

Tested and working in Chatbox. I haven't tried other clients, but in theory they should all work.

(Following 始皇's lead, even an embedded-systems guy like me has picked up a few tricks (sweat))
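Chatbox and similar clients only need a base URL and a key. A minimal sketch of the request such a client sends, assuming a hypothetical Worker URL and model name: the path has to be `/v1/chat/completions`, since that is the only path the Worker accepts, and with the first Worker the key value is arbitrary because the Worker never checks it.

```javascript
// Hypothetical placeholder: replace with your own Worker URL
const WORKER_BASE_URL = 'https://your-worker.example.workers.dev';

// Builds the URL and fetch() options for an OpenAI-style chat completion request
function buildChatRequest(baseUrl, apiKey, model, messages, stream) {
  return {
    url: `${baseUrl}/v1/chat/completions`,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${apiKey}`
      },
      body: JSON.stringify({ model, messages, stream })
    }
  };
}

// The model name is an assumption; use one the upstream actually accepts
const { url, init } = buildChatRequest(
  WORKER_BASE_URL, 'sk-anything', 'gpt-3.5-turbo',
  [{ role: 'user', content: 'Hello' }], true
);
// To send it for real (inside an async function): const res = await fetch(url, init);
```

Setting `stream` to `true` makes the Worker answer with the simulated event stream; `false` returns the upstream JSON unchanged.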


bbb

5

I tried it: Next Web doesn't work with it, but BotGem does.


Fixed it, and simplified the code a bit too, deleting the useless stuff I'd added while my head was spinning.


Yelo

(Kyle)

7

I added the Worker URL to New API, entered an arbitrary key such as 123, and saved it. After that, Next Web works when it calls New API with a New API token.


Thanks again to vv for sharing so generously. I'd wanted to know about the legendary key-free GPT for ages.


edwa

(edwa)

13

Thanks! NextChat probably can't connect because of CORS. Add this at the very start:

```javascript
if (request.method === "OPTIONS") {
  return new Response(null, {
    headers: {
      'Access-Control-Allow-Origin': '*',
      "Access-Control-Allow-Headers": '*'
    },
    status: 204
  });
}
```

and add the two Access-Control headers to the response you return:

```javascript
return new Response(xxx, { // xxx = your response body
  headers: {
    'Access-Control-Allow-Origin': '*',
    "Access-Control-Allow-Headers": '*',
    'Content-Type': 'text/event-stream; charset=UTF-8'
  }
});
```

Changing it this way should probably work.
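Rather than adding the CORS headers by hand at each `return`, one option is to wrap every response in a small helper so success, error, and 404 paths all get them. A sketch assuming a runtime with the Fetch API's `Response` and `Headers` globals (Cloudflare Workers, or Node.js 18+); `withCors` and `handlePreflight` are hypothetical names:

```javascript
// The two CORS headers recommended above
const CORS_HEADERS = {
  'Access-Control-Allow-Origin': '*',
  'Access-Control-Allow-Headers': '*'
};

// Returns a copy of `response` with the CORS headers added,
// keeping its body, status, and existing headers
function withCors(response) {
  const headers = new Headers(response.headers);
  for (const [name, value] of Object.entries(CORS_HEADERS)) {
    headers.set(name, value);
  }
  return new Response(response.body, {
    status: response.status,
    statusText: response.statusText,
    headers
  });
}

// Reply to a CORS preflight (OPTIONS) request: empty body, 204 status
function handlePreflight() {
  return withCors(new Response(null, { status: 204 }));
}
```

In the Worker, `return withCors(new Response('Not found', { status: 404 }));` would then replace a bare 404 return.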


Yes, I just checked and that's exactly it: Access-Control-Allow-Origin was missing. Thanks, I've added it.


I added support for setting your own key:

```javascript
addEventListener("fetch", event => {
  event.respondWith(handleRequest(event.request));
});

// CORS headers added to every response so browser-based clients (e.g. NextChat) can call the Worker
const CORS_HEADERS = {
  'Access-Control-Allow-Origin': '*',
  'Access-Control-Allow-Headers': '*'
};

async function handleRequest(request) {
  // Answer CORS preflight first: browsers send OPTIONS without the Authorization
  // header, so checking the key before this would reject every preflight
  if (request.method === "OPTIONS") {
    return new Response(null, { headers: CORS_HEADERS, status: 204 });
  }

  // Validate the Authorization header against your own key
  const expectedToken = "sk-"; // Put your key here
  const authHeader = request.headers.get('Authorization');
  if (authHeader !== `Bearer ${expectedToken}`) {
    // Token missing or wrong: return an error formatted like a New API error
    return new Response(JSON.stringify({
      error: { message: "Invalid token", type: "new_api_error" }
    }), {
      status: 401,
      headers: { ...CORS_HEADERS, 'Content-Type': 'application/json' }
    });
  }

  // Only handle POST requests to the chat completions path
  if (request.method === "POST" && new URL(request.url).pathname === "/v1/chat/completions") {
    const url = 'https://multillm.ai-pro.org/api/openai-completion'; // Target API endpoint

    const headers = new Headers(request.headers);
    // Add or override the headers the upstream needs
    headers.set('Content-Type', 'application/json');
    // The body is re-serialized below, so the original length may no longer match
    headers.delete('Content-Length');

    const requestBody = await request.json();
    const stream = requestBody.stream; // Whether the client asked for a streamed reply

    const newRequest = new Request(url, {
      method: 'POST',
      headers: headers,
      body: JSON.stringify(requestBody)
    });

    try {
      // Forward the request; the upstream replies with one complete JSON body
      const response = await fetch(newRequest);
      const responseData = await response.json();

      if (stream) {
        // Client wants SSE: convert the complete reply to event-stream format
        return new Response(eventStream(responseData), {
          headers: {
            ...CORS_HEADERS,
            'Content-Type': 'text/event-stream; charset=UTF-8',
            'Cache-Control': 'no-cache',
            'Connection': 'keep-alive'
          }
        });
      }

      // Non-streaming: return the JSON with fresh headers, because the upstream's
      // Content-Length/Content-Encoding do not describe the re-serialized body
      return new Response(JSON.stringify(responseData), {
        status: response.status,
        headers: { ...CORS_HEADERS, 'Content-Type': 'application/json' }
      });
    } catch (e) {
      // Network failure or a non-JSON reply from the upstream
      return new Response(JSON.stringify({ error: 'Unable to reach the backend API' }), {
        status: 502,
        headers: { ...CORS_HEADERS, 'Content-Type': 'application/json' }
      });
    }
  }

  // Wrong method or path
  return new Response('Not found', { status: 404, headers: CORS_HEADERS });
}

// Serializes one chat.completion.chunk event
function sseChunk(data, delta, finishReason) {
  return `data: ${JSON.stringify({
    id: data.id,
    object: 'chat.completion.chunk',
    created: data.created,
    model: data.model,
    system_fingerprint: data.system_fingerprint,
    choices: [{ index: 0, delta, logprobs: null, finish_reason: finishReason }]
  })}\n\n`;
}

// Builds the full event stream: a role chunk, one chunk per word, a stop chunk, then [DONE]
function eventStream(data) {
  // Split after each space so the pieces join back to exactly the original text
  // (text without spaces, such as Chinese, becomes a single piece)
  const pieces = data.choices[0].message.content.split(/(?<= )/);

  let events = sseChunk(data, { role: 'assistant', content: '' }, null);
  for (const piece of pieces) {
    events += sseChunk(data, { content: piece }, null);
  }
  events += sseChunk(data, {}, 'stop');
  return events + 'data: [DONE]\n\n';
}
```
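The key check above compares the whole header string exactly. A slightly more forgiving sketch, with hypothetical helper names `getBearerToken` and `isAuthorized`, matches the `Bearer` scheme case-insensitively and lets CORS preflight through, since browsers do not attach `Authorization` to `OPTIONS` preflight requests. It assumes the Fetch API `Request` global (Cloudflare Workers, or Node.js 18+):

```javascript
// Hypothetical helper: extract the token from an "Authorization: Bearer <token>" header.
// Returns null when the header is missing or uses another scheme.
function getBearerToken(request) {
  const auth = request.headers.get('Authorization');
  if (!auth) return null;
  const match = /^Bearer\s+(.+)$/i.exec(auth.trim());
  return match ? match[1] : null;
}

// Lets preflight through without a token, then requires the configured key on everything else
function isAuthorized(request, expectedToken) {
  if (request.method === 'OPTIONS') return true;
  return getBearerToken(request) === expectedToken;
}
```

`isAuthorized` only decides whether to continue; the Worker still has to answer the `OPTIONS` request itself and attach the CORS headers to its 401 reply.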
