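addEventListener('fetch', event => {
  const url = new URL(event.request.url);

  if (url.pathname === '/v1/chat/completions' && event.request.method === 'OPTIONS') {
    // Answer CORS preflight requests; browsers send one before a JSON POST
    event.respondWith(new Response(null, {
      status: 204,
      headers: {
        'Access-Control-Allow-Origin': '*',
        'Access-Control-Allow-Methods': 'POST, OPTIONS',
        'Access-Control-Allow-Headers': '*'
      }
    }));
  } else if (url.pathname === '/v1/chat/completions' && event.request.method === 'POST') {
    event.respondWith(handleStream(event.request));
  } else {
    event.respondWith(new Response('Not found', { status: 404 }));
  }
});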

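async function handleStream(request) {
  let incomingData;
  try {
    incomingData = await request.json();
  } catch (e) {
    return new Response('Invalid JSON body', { status: 400 });
  }
  if (!Array.isArray(incomingData.messages)) {
    return new Response('"messages" must be an array', { status: 400 });
  }

  // Flatten the OpenAI-style message list into one "role: content" transcript,
  // because the upstream request below takes a single text field
  const userMessage = incomingData.messages
    .map(message => `${message.role}: ${message.content}`)
    .join('\n');
  const userModel = incomingData.model;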

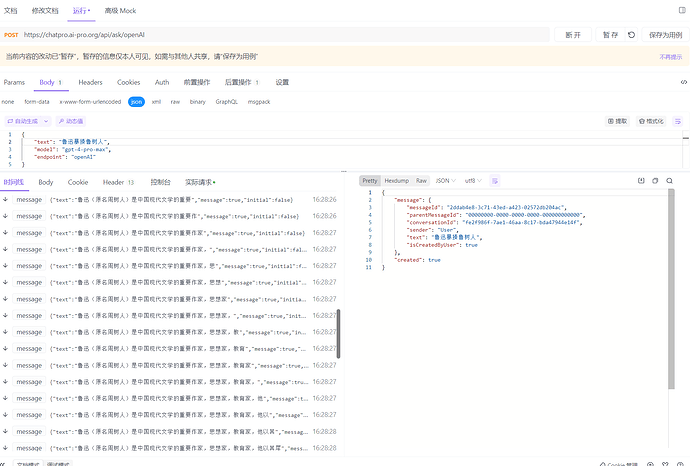

  const data = JSON.stringify({
    text: userMessage,
    endpoint: "openAI",
    model: userModel
  });

  const headers = new Headers({
    "Content-Type": "application/json",
    "origin": "https://chatpro.ai-pro.org",
    "referer": "https://chatpro.ai-pro.org/chat/new",
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
  });

  const response = await fetch('https://chatpro.ai-pro.org/api/ask/openAI', {
    method: 'POST',
    headers: headers,
    body: data
  });

  // Pass upstream errors through unchanged
  if (response.status !== 200) {
    return response;
  }

  const { readable, writable } = new TransformStream();
  const writer = writable.getWriter();
  const currentTimestamp = Math.floor(Date.now() / 1000);

  if (incomingData.stream === false || incomingData.stream == null) {
    // Non-streaming mode: drain the upstream event stream and keep the last
    // JSON payload that followed an "event: message" line
    const reader = response.body.getReader();
    // One decoder with { stream: true } keeps multi-byte characters intact across chunks
    const decoder = new TextDecoder("utf-8");
    let buffer = '';
    let lastLineWasEvent = false;
    let responseData;

    while (true) {
      const { done, value } = await reader.read();
      if (done) break;
      buffer += decoder.decode(value, { stream: true });

      let position;
      while ((position = buffer.indexOf('\n')) !== -1) {
        const line = buffer.substring(0, position).trim();
        buffer = buffer.substring(position + 1);

        if (line.startsWith('event: message')) {
          lastLineWasEvent = true;
        } else if (lastLineWasEvent && line.startsWith('data:')) {
          lastLineWasEvent = false;
          // Parse the data line itself, not the remainder of the buffer
          try {
            responseData = JSON.parse(line.substring(5));
          } catch (e) {
            // Ignore malformed payloads; keep the last valid one
          }
        } else {
          lastLineWasEvent = false;
        }
      }
    }

    if (!responseData) {
      return new Response('Upstream returned no message', { status: 502 });
    }

    const modifiedData = {
      id: `chatcmpl-${currentTimestamp}`,
      object: "chat.completion",
      created: currentTimestamp,
      model: userModel,
      choices: [{
        index: 0,
        message: {
          role: "assistant",
          content: responseData.text || responseData.error
        },
        logprobs: null,
        finish_reason: "stop"
      }],
      usage: {
        // This proxy does not count tokens, so these are placeholders
        prompt_tokens: 0,
        completion_tokens: 0,
        total_tokens: 0
      },
      system_fingerprint: null
    };

    return new Response(JSON.stringify(modifiedData), {
      headers: {
        'Access-Control-Allow-Origin': '*',
        'Access-Control-Allow-Headers': '*',
        'Content-Type': 'application/json; charset=UTF-8'
      },
      status: 200
    });
  }

  // Streaming mode: turn each upstream "event: message" payload into an
  // OpenAI-style chat.completion.chunk and forward it as server-sent events
  const reader = response.body.getReader();
  const decoder = new TextDecoder("utf-8");
  const encoder = new TextEncoder();
  let buffer = '';
  let lastLineWasEvent = false;
  let lastText = "";

  async function pump() {
    try {
      while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        buffer += decoder.decode(value, { stream: true });

        let position;
        while ((position = buffer.indexOf('\n')) !== -1) {
          const line = buffer.substring(0, position).trim();
          buffer = buffer.substring(position + 1);

          if (line.startsWith('event: message')) {
            lastLineWasEvent = true;
            continue;
          }
          if (!lastLineWasEvent || !line.startsWith('data:')) {
            lastLineWasEvent = false;
            continue;
          }
          lastLineWasEvent = false;

          let dataContent;
          try {
            dataContent = JSON.parse(line.substring(5));
          } catch (e) {
            continue; // skip malformed payloads instead of killing the stream
          }

          // Upstream payloads appear to carry the accumulated text, so forward only the new suffix
          const text = dataContent.text || "";
          const textDifference = text.startsWith(lastText) ? text.slice(lastText.length) : text;
          lastText = text;

          const chunk = {
            id: `chatcmpl-${currentTimestamp}`,
            object: "chat.completion.chunk",
            created: currentTimestamp,
            model: userModel,
            choices: [{
              index: 0,
              delta: { content: textDifference },
              // An unchanged text is taken to mean the answer is complete
              finish_reason: textDifference === "" ? "stop" : null
            }]
          };
          await writer.write(encoder.encode(`data: ${JSON.stringify(chunk)}\n\n`));
        }
      }
      // OpenAI-compatible clients expect this end-of-stream marker
      await writer.write(encoder.encode('data: [DONE]\n\n'));
      await writer.close();
    } catch (err) {
      await writer.abort(err).catch(() => {});
    }
  }

  pump();

  return new Response(readable, {
    headers: {
      'Access-Control-Allow-Origin': '*',
      'Access-Control-Allow-Headers': '*',
      'Content-Type': 'text/event-stream; charset=UTF-8'
    },
    status: response.status
  });
}
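Both branches of the worker above rely on the same parsing idea: complete lines are taken off a buffer, an `event: message` line marks the next `data:` line as a JSON payload, and any partial line stays in the buffer until the next chunk arrives. In streaming mode, only the newly appended part of the text is forwarded. The sketch below isolates that logic as two pure functions so it can be tested without a Worker runtime. `parseMessageEvents` and `textDelta` are hypothetical names, and the sketch assumes (as the worker does) that each upstream payload carries the full text generated so far.

```javascript
// Hypothetical helpers illustrating the worker's parsing logic; not part of the original script.

// Consume complete lines from `buffer`. Returns the JSON payloads of "data:" lines that
// directly follow an "event: message" line, plus the unfinished remainder of the buffer.
function parseMessageEvents(buffer, state = { lastLineWasEvent: false }) {
  const payloads = [];
  let position;
  while ((position = buffer.indexOf('\n')) !== -1) {
    const line = buffer.substring(0, position).trim();
    buffer = buffer.substring(position + 1);
    if (line.startsWith('event: message')) {
      state.lastLineWasEvent = true;
    } else if (state.lastLineWasEvent && line.startsWith('data:')) {
      state.lastLineWasEvent = false;
      try {
        payloads.push(JSON.parse(line.substring(5)));
      } catch (e) {
        // Skip malformed JSON payloads
      }
    } else {
      state.lastLineWasEvent = false;
    }
  }
  return { payloads, rest: buffer, state };
}

// Return only the part of `next` appended after `prev`;
// fall back to the whole text if `next` does not extend `prev`.
function textDelta(prev, next) {
  return next.startsWith(prev) ? next.slice(prev.length) : next;
}

// The second network chunk starts in the middle of an "event: message" line.
const first = parseMessageEvents('event: message\ndata: {"text":"Hel"}\n\nevent: mess');
const second = parseMessageEvents(first.rest + 'age\ndata: {"text":"Hello"}\n\n', first.state);
const texts = [...first.payloads, ...second.payloads].map(p => p.text);

console.log(texts.join(' | '));             // Hel | Hello
console.log(textDelta(texts[0], texts[1])); // lo
```

Carrying `state` between calls matters because an `event: message` line and its `data:` line can land in different chunks.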

Supported models

gpt-3.5-turbo

gpt-4-turbo

gpt-4-turbo-2024-04-09

gpt-4-1106-preview
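The worker forwards `model` to the upstream without checking it. If you want to reject unsupported names before making the upstream call, one option is a small allowlist built from the list above. This is a hypothetical addition: `SUPPORTED_MODELS` and `validateModel` are illustrative names, the error body only roughly follows OpenAI's `{ error: { message, type, code } }` shape, and the list can drift from what the upstream actually accepts.

```javascript
// Hypothetical allowlist mirroring the "Supported models" list; the upstream may change it.
const SUPPORTED_MODELS = new Set([
  'gpt-3.5-turbo',
  'gpt-4-turbo',
  'gpt-4-turbo-2024-04-09',
  'gpt-4-1106-preview',
]);

// Returns null when the model is accepted, otherwise an OpenAI-style error body.
function validateModel(model) {
  if (SUPPORTED_MODELS.has(model)) return null;
  return {
    error: {
      message: `The model '${model}' is not supported by this proxy.`,
      type: 'invalid_request_error',
      code: 'model_not_found',
    },
  };
}

console.log(validateModel('gpt-4-turbo'));      // null
console.log(validateModel('gpt-5').error.code); // model_not_found
```

Inside `handleStream`, a non-null result could be returned as `new Response(JSON.stringify(err), { status: 400, headers: { 'Content-Type': 'application/json' } })` before the upstream `fetch`.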

curl --request POST \
  --url https://ai-pro.zhc.ink/v1/chat/completions \
  --header 'Content-Type: application/json' \
  --data '{
    "messages": [
      { "role": "user", "content": "1 pregnant woman plus 1 man?" },
      { "role": "assistant", "content": "That makes three people." },
      { "role": "user", "content": "So how many people is 1 pregnant woman plus 1 man?" }
    ],
    "stream": false,
    "model": "gpt-4-turbo"
  }'
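With `"stream": true` in the request body, the worker instead replies with `data:` lines that each hold a `chat.completion.chunk` object. The following is a minimal client-side sketch for reassembling the reply from such a body. It assumes the chunk layout produced by the worker code above and stops at a `data: [DONE]` line if one is present, or at the end of the input otherwise. `collectStreamedText` is a hypothetical name.

```javascript
// Hypothetical client-side helper: rebuild the assistant reply from an
// OpenAI-style server-sent-events body made of "data: {...}" lines.
function collectStreamedText(sseBody) {
  let text = '';
  for (const rawLine of sseBody.split('\n')) {
    const line = rawLine.trim();
    if (!line.startsWith('data:')) continue; // skip blank separator lines
    const payload = line.slice(5).trim();
    if (payload === '[DONE]') break;         // end-of-stream marker, if sent
    const chunk = JSON.parse(payload);
    text += chunk.choices?.[0]?.delta?.content ?? '';
  }
  return text;
}

const sample =
  'data: {"object":"chat.completion.chunk","choices":[{"index":0,"delta":{"content":"Three "},"finish_reason":null}]}\n\n' +
  'data: {"object":"chat.completion.chunk","choices":[{"index":0,"delta":{"content":"people."},"finish_reason":null}]}\n\n' +
  'data: {"object":"chat.completion.chunk","choices":[{"index":0,"delta":{"content":""},"finish_reason":"stop"}]}\n\n' +
  'data: [DONE]\n\n';

console.log(collectStreamedText(sample)); // Three people.
```

Against a live deployment, the same function can be applied to the text of the `fetch` response. Reading the whole body first gives up incremental display but keeps the sketch short.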