NVIDIA's official first-party API service; newly registered users receive 1,000 free credits.

Quick Start

Register an Account

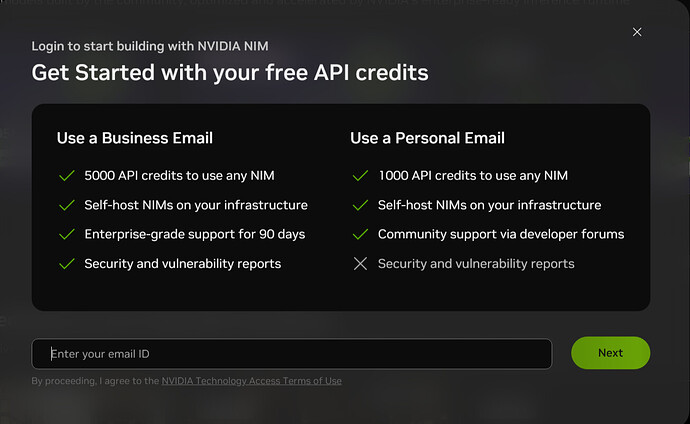

- Go to the NVIDIA website, click Login in the top-right corner, enter your email address in the pop-up, and click Next.

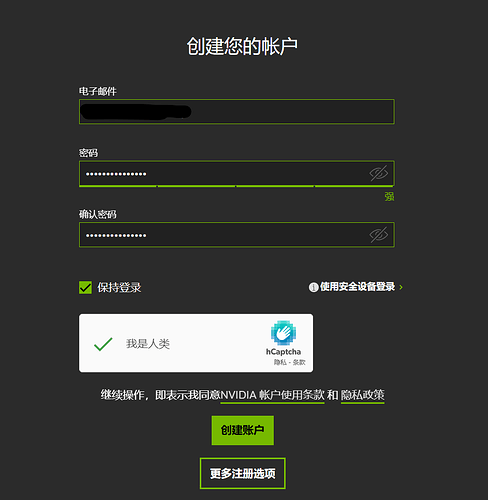

- Enter and confirm your password, complete the human verification check, and click Create Account.

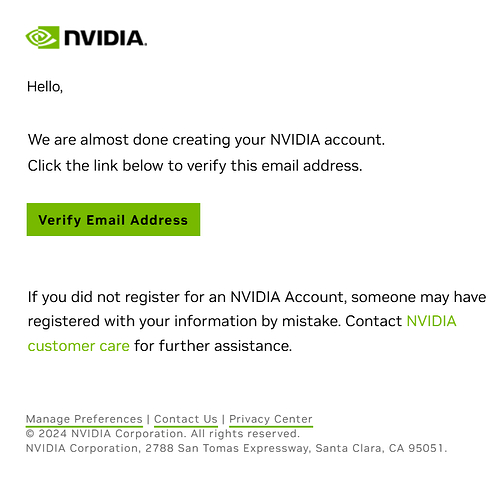

- Open the new message in your email inbox and click Verify Email Address.

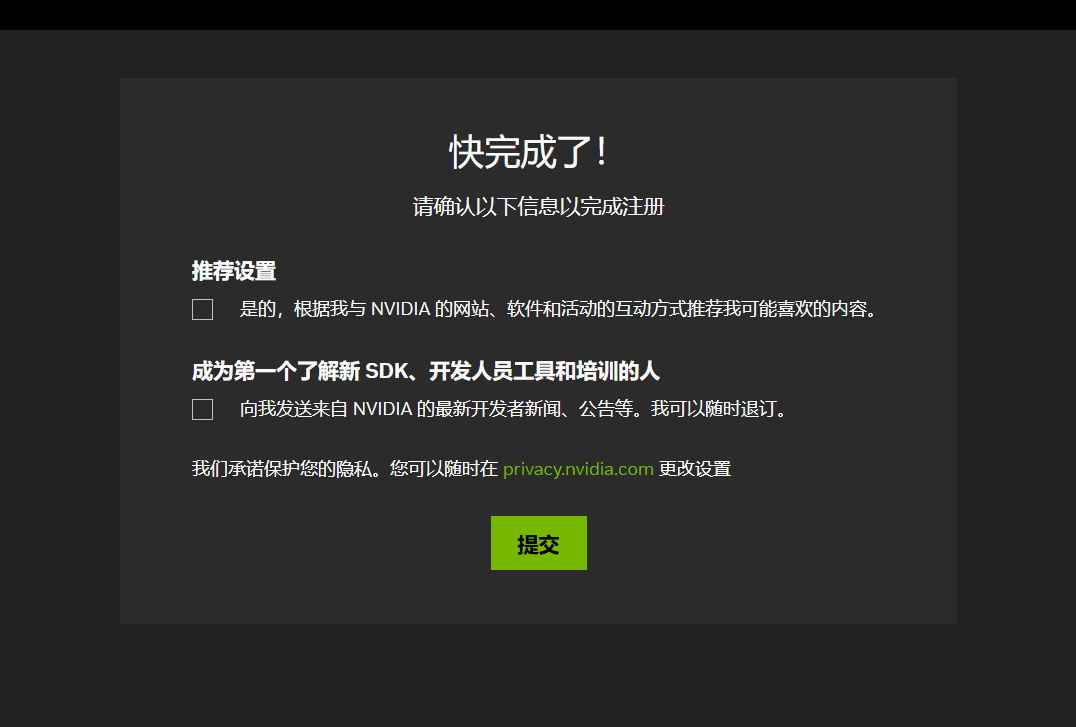

- Repeat the first step (log in with your email address), then click Submit.

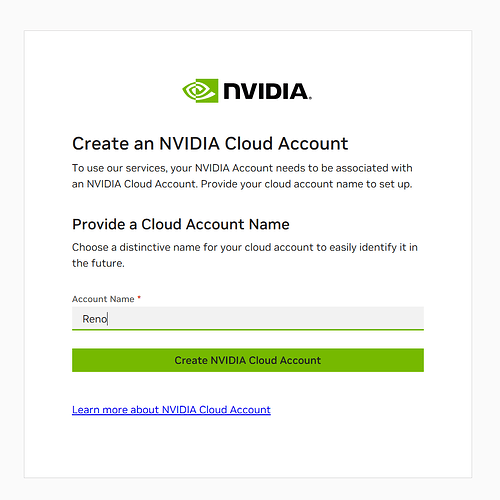

- Enter a username and click Create NVIDIA Cloud Account.

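- New accounts receive 1,000 credits. To get 5,000 credits, click your avatar in the top-right corner, select Request More, and fill in your company information.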

Get an API Key

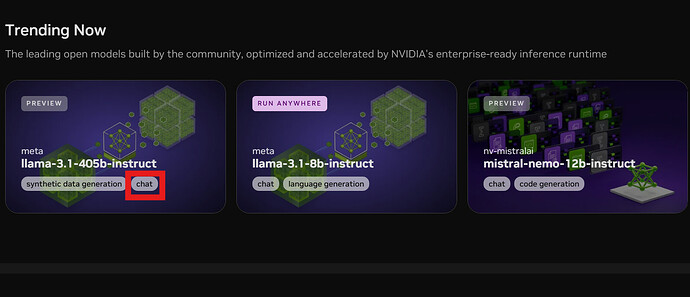

- Click Chat.

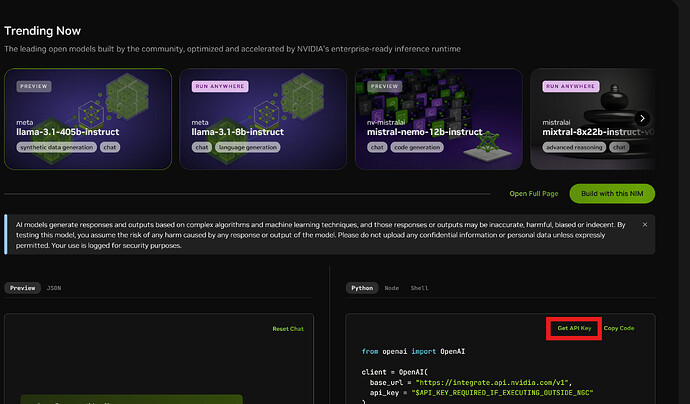

- Click Get API Key, then Generate Key, then Copy Key to copy the key.
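Before wiring the key into OneAPI, it can help to confirm that it works by calling NVIDIA's chat endpoint directly. The sketch below assumes the OpenAI-compatible path `/v1/chat/completions` under the base URL used in the OneAPI configuration, and uses `meta/llama-3.1-8b-instruct` from the redirect table as the model; `buildChatRequest` and `testKey` are hypothetical helper names, not part of any NVIDIA SDK.

```javascript
// Hypothetical helper: builds (but does not send) a chat request to NVIDIA's
// OpenAI-compatible endpoint. The path /v1/chat/completions is an assumption
// based on the OpenAI-compatible convention.
const NVIDIA_CHAT_URL = "https://integrate.api.nvidia.com/v1/chat/completions";

function buildChatRequest(apiKey, model, prompt) {
  return {
    url: NVIDIA_CHAT_URL,
    init: {
      method: "POST",
      headers: {
        "Authorization": `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model, // full NVIDIA model ID, e.g. "meta/llama-3.1-8b-instruct"
        messages: [{ role: "user", content: prompt }],
        max_tokens: 64, // keep the test call cheap
      }),
    },
  };
}

// Hypothetical helper: sends one short prompt and returns the reply text.
// Needs network access and a real key (global fetch exists in Node 18+).
async function testKey(apiKey) {
  const { url, init } = buildChatRequest(apiKey, "meta/llama-3.1-8b-instruct", "Say hello");
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`HTTP ${res.status}: ${await res.text()}`);
  const data = await res.json();
  return data.choices[0].message.content;
}
```

In Node 18 or later, `testKey(process.env.NVIDIA_API_KEY).then(console.log)` should print a short greeting if the key is valid; an HTTP 401 or 403 points to a bad or mis-copied key.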

OneAPI Configuration

Chat Models

- baseurl: https://integrate.api.nvidia.com

- key: the key you copied

- model: yi-large, sea-lion-7b-instruct, starcoder2-7b, starcoder2-15b, dbrx-instruct, deepseek-coder-6.7b-instruct, gemma-7b, gemma-2b, gemma-2-9b-it, gemma-2-27b-it, codegemma-1.1-7b, codegemma-7b, recurrentgemma-2b, granite-34b-code-instruct, granite-8b-code-instruct, breeze-7b-instruct, codellama-70b, llama2-70b, llama3-8b, llama3-70b, llama-3.1-8b-instruct, llama-3.1-70b-instruct, llama-3.1-405b-instruct, phi-3-medium-128k-instruct, phi-3-medium-4k-instruct, phi-3-mini-128k-instruct, phi-3-mini-4k-instruct, phi-3-small-128k-instruct, phi-3-small-8k-instruct, codestral-22b-instruct-v0.1, mamba-codestral-7b-v0.1, mistral-7b-instruct, mistral-7b-instruct-v0.3, mixtral-8x7b-instruct, mixtral-8x22b-instruct, mistral-large, mistral-nemo-12b-instruct, llama3-chatqa-1.5-70b, llama3-chatqa-1.5-8b, nemotron-4-340b-instruct, nemotron-4-340b-reward, usdcode-llama3-70b-instruct, seallm-7b-v2.5, arctic, solar-10.7b-instruct

- Model redirection (maps each name above to its full NVIDIA model ID):

```json
{
  "yi-large": "01-ai/yi-large",
  "sea-lion-7b-instruct": "aisingapore/sea-lion-7b-instruct",
  "starcoder2-7b": "bigcode/starcoder2-7b",
  "starcoder2-15b": "bigcode/starcoder2-15b",
  "dbrx-instruct": "databricks/dbrx-instruct",
  "deepseek-coder-6.7b-instruct": "deepseek-ai/deepseek-coder-6.7b-instruct",
  "gemma-7b": "google/gemma-7b",
  "gemma-2b": "google/gemma-2b",
  "gemma-2-9b-it": "google/gemma-2-9b-it",
  "gemma-2-27b-it": "google/gemma-2-27b-it",
  "codegemma-1.1-7b": "google/codegemma-1.1-7b",
  "codegemma-7b": "google/codegemma-7b",
  "recurrentgemma-2b": "google/recurrentgemma-2b",
  "granite-34b-code-instruct": "ibm/granite-34b-code-instruct",
  "granite-8b-code-instruct": "ibm/granite-8b-code-instruct",
  "breeze-7b-instruct": "mediatek/breeze-7b-instruct",
  "codellama-70b": "meta/codellama-70b",
  "llama2-70b": "meta/llama2-70b",
  "llama3-8b": "meta/llama3-8b",
  "llama3-70b": "meta/llama3-70b",
  "llama-3.1-8b-instruct": "meta/llama-3.1-8b-instruct",
  "llama-3.1-70b-instruct": "meta/llama-3.1-70b-instruct",
  "llama-3.1-405b-instruct": "meta/llama-3.1-405b-instruct",
  "phi-3-medium-128k-instruct": "microsoft/phi-3-medium-128k-instruct",
  "phi-3-medium-4k-instruct": "microsoft/phi-3-medium-4k-instruct",
  "phi-3-mini-128k-instruct": "microsoft/phi-3-mini-128k-instruct",
  "phi-3-mini-4k-instruct": "microsoft/phi-3-mini-4k-instruct",
  "phi-3-small-128k-instruct": "microsoft/phi-3-small-128k-instruct",
  "phi-3-small-8k-instruct": "microsoft/phi-3-small-8k-instruct",
  "codestral-22b-instruct-v0.1": "mistralai/codestral-22b-instruct-v0.1",
  "mamba-codestral-7b-v0.1": "mistralai/mamba-codestral-7b-v0.1",
  "mistral-7b-instruct": "mistralai/mistral-7b-instruct",
  "mistral-7b-instruct-v0.3": "mistralai/mistral-7b-instruct-v0.3",
  "mixtral-8x7b-instruct": "mistralai/mixtral-8x7b-instruct",
  "mixtral-8x22b-instruct": "mistralai/mixtral-8x22b-instruct",
  "mistral-large": "mistralai/mistral-large",
  "mistral-nemo-12b-instruct": "nv-mistralai/mistral-nemo-12b-instruct",
  "llama3-chatqa-1.5-70b": "nvidia/llama3-chatqa-1.5-70b",
  "llama3-chatqa-1.5-8b": "nvidia/llama3-chatqa-1.5-8b",
  "nemotron-4-340b-instruct": "nvidia/nemotron-4-340b-instruct",
  "nemotron-4-340b-reward": "nvidia/nemotron-4-340b-reward",
  "usdcode-llama3-70b-instruct": "nvidia/usdcode-llama3-70b-instruct",
  "seallm-7b-v2.5": "seallms/seallm-7b-v2.5",
  "arctic": "snowflake/arctic",
  "solar-10.7b-instruct": "upstage/solar-10.7b-instruct"
}
```
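Conceptually, model redirection swaps the short name a client sends for the full NVIDIA model ID before the request is forwarded upstream. The sketch below only models that behavior; it is not OneAPI's own code (OneAPI is written in Go), `redirectModel` is a hypothetical name, and forwarding unmapped names unchanged is an assumption about the redirect's fallback.

```javascript
// Excerpt of the redirect table above (the remaining entries work the same way).
const modelMapping = {
  "yi-large": "01-ai/yi-large",
  "llama-3.1-8b-instruct": "meta/llama-3.1-8b-instruct",
  "mistral-large": "mistralai/mistral-large",
};

// Hypothetical helper: returns a copy of the request body with the model
// name replaced by its mapped upstream ID. Assumption: names without an
// entry are forwarded unchanged.
function redirectModel(requestBody, mapping) {
  const name = requestBody.model;
  const upstream = Object.prototype.hasOwnProperty.call(mapping, name)
    ? mapping[name]
    : name;
  return { ...requestBody, model: upstream };
}
```

For example, a client request with `"model": "yi-large"` would reach NVIDIA as `"model": "01-ai/yi-large"`, while the rest of the body (messages, temperature, and so on) passes through untouched.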

Image Generation Models

- baseurl: the URL of your Worker (the worker.js script below)

- key: the key you copied

- model: sdxl-lightning, stable-diffusion-3-medium, stable-diffusion-xl, sdxl-turbo

- Model redirection:

```json
{
  "sdxl-lightning": "bytedance/sdxl-lightning",
  "stable-diffusion-3-medium": "stabilityai/stable-diffusion-3-medium",
  "stable-diffusion-xl": "stabilityai/stable-diffusion-xl",
  "sdxl-turbo": "stabilityai/sdxl-turbo"
}
```
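Every redirect target for the image models must match a key in the `configs` object inside `getNvidiaRequestDetails` in worker.js; a typo on either side only shows up at request time as an "Unsupported model" error. The sketch below checks this up front. `findUnsupportedTargets` is a hypothetical helper, and the supported-model set is copied by hand from worker.js, so it is an assumption that the two lists are kept in sync.

```javascript
// The image-model redirect table from the OneAPI configuration.
const imageModelMapping = {
  "sdxl-lightning": "bytedance/sdxl-lightning",
  "stable-diffusion-3-medium": "stabilityai/stable-diffusion-3-medium",
  "stable-diffusion-xl": "stabilityai/stable-diffusion-xl",
  "sdxl-turbo": "stabilityai/sdxl-turbo",
};

// Keys of the configs object in getNvidiaRequestDetails (copied by hand).
const WORKER_SUPPORTED_MODELS = new Set([
  "bytedance/sdxl-lightning",
  "stabilityai/stable-diffusion-3-medium",
  "stabilityai/sdxl-turbo",
  "stabilityai/stable-diffusion-xl",
]);

// Hypothetical helper: lists every "alias -> target" pair whose target the
// Worker would reject; an empty array means the configuration is consistent.
function findUnsupportedTargets(mapping, supported) {
  return Object.entries(mapping)
    .filter(([, target]) => !supported.has(target))
    .map(([alias, target]) => `${alias} -> ${target}`);
}
```

Running `findUnsupportedTargets(imageModelMapping, WORKER_SUPPORTED_MODELS)` after adding a new model to either side is a cheap way to catch a mismatch before deploying.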

worker.js

```javascript
// Base URL of NVIDIA's image-generation (genai) endpoints.
const NVIDIA_BASE_URL = "https://ai.api.nvidia.com/v1/genai/";

// Cache proxied images for one year.
const CACHE_CONTROL = 'public, max-age=31536000';

const CORS_HEADERS = {
  'Access-Control-Allow-Origin': '*',
  'Access-Control-Allow-Headers': '*',
  'Access-Control-Allow-Methods': 'GET, POST, OPTIONS'
};

addEventListener('fetch', event => {
  // respondWith() may only be called once, and a synchronous try/catch cannot
  // see async failures, so errors are caught on the returned promise instead.
  event.respondWith(
    handleRequest(event.request).catch(error => handleError(error, 'Top-level error handling'))
  );
});

async function handleRequest(request) {
  const path = new URL(request.url).pathname;

  // Route table: method -> handler, or method -> { path prefix -> handler }
  const routeMap = {
    'OPTIONS': handleCORS,
    'POST': {
      '/v1/chat/completions': handleChatCompletions
    },
    'GET': {
      '/file/': handleFileProxy
    }
  };

  const methodRoutes = routeMap[request.method];
  if (typeof methodRoutes === 'function') {
    return methodRoutes(request);
  }
  if (methodRoutes) {
    const matchingPath = Object.keys(methodRoutes).find(route => path.startsWith(route));
    if (matchingPath) {
      return methodRoutes[matchingPath](request, path);
    }
  }

  // Default: 404
  console.log('No matching route found. Returning 404.');
  return new Response('Not Found', { status: 404 });
}

function handleCORS() {
  return new Response(null, { headers: CORS_HEADERS });
}

// Proxies /file/... to telegra.ph so image links use the Worker's own domain.
async function handleFileProxy(request, path) {
  const imageUrl = `https://telegra.ph${path}`;
  const ifModifiedSince = request.headers.get('If-Modified-Since');

  // Forward If-Modified-Since only when the client sent it; passing null
  // would send the literal string "null" upstream.
  const upstreamHeaders = new Headers();
  if (ifModifiedSince) upstreamHeaders.set('If-Modified-Since', ifModifiedSince);

  const response = await fetch(imageUrl, { headers: upstreamHeaders });

  if (response.status === 304) {
    const notModifiedHeaders = new Headers({ 'Cache-Control': CACHE_CONTROL });
    if (ifModifiedSince) notModifiedHeaders.set('Last-Modified', ifModifiedSince);
    return new Response(null, { status: 304, headers: notModifiedHeaders });
  }

  // Upstream response headers are immutable, so copy them before editing.
  const headers = new Headers(response.headers);
  headers.set('Cache-Control', CACHE_CONTROL);
  return new Response(response.body, { status: response.status, headers });
}

async function handleChatCompletions(request) {
  try {
    const authHeader = request.headers.get('Authorization');
    if (!authHeader?.startsWith('Bearer ')) {
      return new Response('Unauthorized', { status: 401, headers: CORS_HEADERS });
    }

    const { messages, model, stream } = await request.json();
    if (!Array.isArray(messages) || messages.length === 0 || !model) {
      return new Response('Invalid request format', { status: 400, headers: CORS_HEADERS });
    }

    // The text of the last message is used as the image prompt.
    const prompt = messages[messages.length - 1].content;
    if (typeof prompt !== 'string') {
      return new Response('Invalid prompt format', { status: 400, headers: CORS_HEADERS });
    }

    const imagesBase64 = await generateImagesWithNvidia(prompt, model, authHeader);

    // Upload each image to Telegraph, then point the links at this Worker's
    // /file/ proxy (Telegraph returns paths like "/file/<name>").
    const workerOrigin = new URL(request.url).origin;
    const srcPaths = await Promise.all(imagesBase64.map(uploadImageToTelegraph));
    const content = srcPaths.map(src => `![image](${workerOrigin}${src})`).join('\n\n');

    const created = Math.floor(Date.now() / 1000);
    const id = `imggen-${created}`;

    if (stream) {
      // Streaming clients get one SSE chunk followed by the [DONE] marker.
      const chunk = {
        id, object: 'chat.completion.chunk', created, model,
        choices: [{ index: 0, delta: { role: 'assistant', content }, finish_reason: 'stop' }]
      };
      return new Response(`data: ${JSON.stringify(chunk)}\n\ndata: [DONE]\n\n`, {
        status: 200,
        headers: { ...CORS_HEADERS, 'Content-Type': 'text/event-stream' }
      });
    }

    // Non-streaming clients get a regular chat.completion object.
    const completion = {
      id, object: 'chat.completion', created, model,
      choices: [{ index: 0, message: { role: 'assistant', content }, finish_reason: 'stop' }]
    };
    return new Response(JSON.stringify(completion), {
      status: 200,
      headers: { ...CORS_HEADERS, 'Content-Type': 'application/json' }
    });
  } catch (error) {
    return handleError(error, 'Error handling chat completions');
  }
}

async function generateImagesWithNvidia(prompt, model, authorization) {
  const { url, payload } = getNvidiaRequestDetails(model, prompt);

  const response = await fetch(url, {
    method: 'POST',
    headers: {
      'Authorization': authorization,
      'Content-Type': 'application/json'
    },
    body: JSON.stringify(payload)
  });

  if (!response.ok) {
    throw new Error(`NVIDIA API Error: ${response.status} ${await response.text()}`);
  }

  // Depending on the model, the image arrives either as artifacts[].base64
  // or as a single base64 string in "image".
  const data = await response.json();
  if (Array.isArray(data.artifacts)) {
    return data.artifacts.map(artifact => artifact.base64);
  }
  if (data.image) {
    return [data.image];
  }
  throw new Error('Unexpected response format from NVIDIA API');
}

function getNvidiaRequestDetails(model, prompt) {
  const configs = {
    'bytedance/sdxl-lightning': {
      url: `${NVIDIA_BASE_URL}bytedance/sdxl-lightning`,
      payload: {
        cfg_scale: 0, clip_guidance_preset: "NONE", height: 1024, width: 1024,
        sampler: "K_EULER_ANCESTRAL", samples: 1, seed: 0, steps: 4,
        style_preset: "none", text_prompts: [{ text: prompt, weight: 1 }]
      }
    },
    'stabilityai/stable-diffusion-3-medium': {
      url: `${NVIDIA_BASE_URL}stabilityai/stable-diffusion-3-medium`,
      payload: {
        aspect_ratio: "1:1", cfg_scale: 5, mode: "text-to-image", model: "sd3",
        negative_prompt: "", output_format: "jpeg", prompt, seed: 0, steps: 50
      }
    },
    'stabilityai/sdxl-turbo': {
      url: `${NVIDIA_BASE_URL}stabilityai/sdxl-turbo`,
      payload: {
        height: 512, width: 512, cfg_scale: 0, clip_guidance_preset: "NONE",
        sampler: "K_EULER_ANCESTRAL", samples: 1, seed: 0, steps: 4,
        style_preset: "none", text_prompts: [{ text: prompt, weight: 1 }]
      }
    },
    'stabilityai/stable-diffusion-xl': {
      url: `${NVIDIA_BASE_URL}stabilityai/stable-diffusion-xl`,
      payload: {
        height: 1024, width: 1024, cfg_scale: 5, clip_guidance_preset: "NONE",
        sampler: "K_EULER_ANCESTRAL", samples: 1, seed: 0, steps: 25,
        style_preset: "none", text_prompts: [{ text: prompt, weight: 1 }]
      }
    }
  };

  const config = configs[model];
  if (!config) {
    throw new Error(`Unsupported model: ${model}`);
  }
  return config;
}

// JPEG data base64-encodes to a "/9j/" prefix; everything else is treated as
// PNG. stable-diffusion-3-medium is requested with output_format "jpeg" above.
function guessImageType(base64) {
  return base64.startsWith('/9j/')
    ? { mime: 'image/jpeg', name: 'image.jpg' }
    : { mime: 'image/png', name: 'image.png' };
}

async function uploadImageToTelegraph(imageBase64) {
  const bytes = Uint8Array.from(atob(imageBase64), c => c.charCodeAt(0));
  const { mime, name } = guessImageType(imageBase64);

  const uploadFormData = new FormData();
  uploadFormData.append('file', new Blob([bytes], { type: mime }), name);

  const response = await fetch('https://telegra.ph/upload', {
    method: 'POST',
    body: uploadFormData
  });
  if (!response.ok) {
    throw new Error('Failed to upload image to Telegraph');
  }

  // On success Telegraph answers with [{ "src": "/file/<name>" }].
  const result = await response.json();
  if (!Array.isArray(result) || !result[0]?.src) {
    throw new Error(`Unexpected Telegraph response: ${JSON.stringify(result)}`);
  }
  return result[0].src;
}

function handleError(error, context) {
  console.error(context, error);
  return new Response('Internal Server Error', { status: 500, headers: CORS_HEADERS });
}
```
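For a client that talks to this endpoint with streaming enabled, the reply is server-sent events whose `choices[0].delta.content` carries Markdown image links. The sketch below pulls the image URLs out of such a reply. It assumes `data: {json}` lines, Markdown links of the form `![alt](URL)`, and a closing `data: [DONE]` line; `extractImageUrls` is a hypothetical helper name.

```javascript
// Hypothetical helper: extracts image URLs from an SSE reply whose chunks
// carry Markdown image links in choices[0].delta.content.
function extractImageUrls(sseText) {
  const urls = [];
  for (const line of sseText.split("\n")) {
    if (!line.startsWith("data: ")) continue; // skip blank separator lines
    const payload = line.slice("data: ".length).trim();
    if (payload === "[DONE]") break; // end-of-stream marker
    const chunk = JSON.parse(payload);
    const content = chunk.choices?.[0]?.delta?.content ?? "";
    // Capture the URL inside each ![alt](URL) occurrence.
    for (const match of content.matchAll(/!\[[^\]]*\]\(([^)\s]+)\)/g)) {
      urls.push(match[1]);
    }
  }
  return urls;
}
```

Because the Worker sends all images in a single chunk, a simple line-by-line parse is enough here; a client consuming a true token-by-token stream would need to buffer partial lines across network reads.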