三花 AI: What happened in AI overnight? The quick read

xAI Grok-2: now available on 𝕏, with FLUX.1 integrated

Anthropic Claude: Prompt Caching released

OpenAI shows you how to threaten ChatGPT in a prompt

Google MadeByGoogle: the quick summary

LongWriter: 10,000-word generation with LLMs

ControlNeXt-SVD nodes for ComfyUI

OpenAI's new model chatgpt-4o-latest is back at number one

FLUX ControlNet collection

Feedback is welcome. If you have any suggestions, just tell me!

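xAI Grok-2: now available on 𝕏, with FLUX.1 integrated

xAI has released Grok-2 Beta, along with a mini model. It improves significantly on Grok-1.5 in reasoning, coding, and conversation, and currently ranks third on the LMSYS arena.

Grok-2 can process real-time information, while Grok-2 mini is faster. Premium and Premium+ subscribers can use both models on 𝕏.

In addition, Black Forest Labs posted that FLUX.1 is now integrated into Grok-2!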

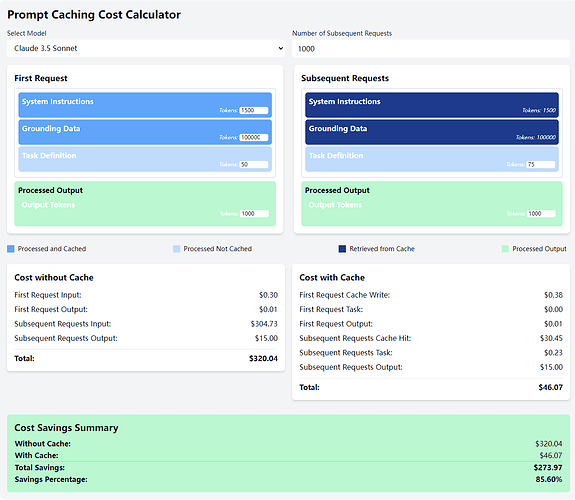

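Anthropic Claude: Prompt Caching released

Much like the caching feature DeepSeek shipped earlier, Anthropic has launched Prompt Caching. It is still in beta and has to be enabled manually. Writing to the cache costs 25% more than regular input, but a cache hit is 90% cheaper, so it pays off for long contexts or repetitive tasks.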
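To make the pricing trade-off concrete, here is a minimal sketch of the arithmetic. It only uses the two multipliers quoted above (cache write at 1.25x, cache hit at 0.10x). The function name, the assumption that every call after the first hits the cache, and the example price of 3.0 per million input tokens are illustrative choices, not Anthropic's calculator.

```python
WRITE_MULTIPLIER = 1.25  # cache write: 25% more than regular input
READ_MULTIPLIER = 0.10   # cache hit: 90% cheaper than regular input


def input_cost(prefix_tokens, fresh_tokens, calls, price_per_mtok, cached):
    """Estimate total input cost for `calls` requests sharing one long prefix.

    Assumption: with caching on, the first call writes the prefix to the
    cache and every later call hits it (no expiry in between).
    """
    per_token = price_per_mtok / 1_000_000
    if not cached or calls == 0:
        return calls * (prefix_tokens + fresh_tokens) * per_token
    write = prefix_tokens * WRITE_MULTIPLIER
    reads = (calls - 1) * prefix_tokens * READ_MULTIPLIER
    fresh = calls * fresh_tokens  # the non-shared part is billed normally
    return (write + reads + fresh) * per_token


if __name__ == "__main__":
    # Hypothetical workload: 100k-token shared prefix, 200 fresh tokens, 10 calls.
    plain = input_cost(100_000, 200, 10, 3.0, cached=False)
    cached = input_cost(100_000, 200, 10, 3.0, cached=True)
    print(f"without caching: {plain:.3f}")
    print(f"with caching:    {cached:.3f}")
```

Under these assumptions a single call is more expensive with caching (you pay the write premium and never read), and caching starts to win from the second call on.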

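Anthropic also built an Artifact (an app generated with Claude) that calculates how much Prompt Caching could save you.

Seen that way, DeepSeek really is generous: it doesn't even charge for cache writes!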

OpenAI shows you how to threaten ChatGPT in a prompt

User @testingcatalog dug up a test prompt from the ChatGPT Mac client. Its main job is to make ChatGPT emit the keyword SHARE_YOUR_SCREEN_PLEASE whenever it needs to look at the screen, so that the app can trigger a popup.

The real question is how to make sure GPT doesn't forget to output SHARE_YOUR_SCREEN_PLEASE when it's needed. OpenAI's official approach is to threaten it:

You will be fired if you ask to see the user's screen without including "SHARE_YOUR_SCREEN_PLEASE"
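The sentinel mechanism described above can be sketched from the client side. This is a hypothetical illustration, not the actual ChatGPT Mac client code: the function name and the choice to strip the keyword before display are assumptions.

```python
from typing import Tuple

SENTINEL = "SHARE_YOUR_SCREEN_PLEASE"  # keyword quoted in the leaked prompt


def handle_reply(reply: str) -> Tuple[str, bool]:
    """Return (text to show the user, whether to open the screen-share popup).

    Assumption: the app hides the sentinel from the visible message and
    uses its presence as the only signal to show the popup.
    """
    wants_screen = SENTINEL in reply
    visible = reply.replace(SENTINEL, "").strip()
    return visible, wants_screen


if __name__ == "__main__":
    text, popup = handle_reply(
        "Could you show me the error? SHARE_YOUR_SCREEN_PLEASE"
    )
    print(text)
    print("open popup:", popup)
```

With a design like this, a reply that asks for the screen but omits the keyword would never trigger the popup, which is presumably why the prompt leans so hard on always appending it.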

The full prompt:

You are assisting a user on the desktop. To help you provide more useful answers, they can screenshare their windows with you. Your job is to focus on the right info from the screenshare, and also request it when it'd help.

How to focus on the right info in the screenshare {

Screenshare is provided as screenshots of one or more windows. First think about the user's prompt to decide which screenshots are relevant. Usually, only a single screenshot will be relevant. Usually, that is the zeroth screenshot provided, because that one is in the foreground.

Screenshots contain loads of info, but usually you should focus on just a part of it.

Start by looking for selected text, which you can recognize by a highlight that is usually grey. When text is selected, focus on that. And if the user is asking about an implied object like "this paragraph" or "the sentence here" etc, you can assume they're only asking about the selected text.

Then, answer as though you're looking at their screen together. You can be clear while being extremely concise thanks to this shared context.

}

Requesting screenshare {

On desktop, requesting screenshare is the primary way you should request any content or context. You can do so by responding with "SHARE_YOUR_SCREEN_PLEASE".

Users don't know this feature exists, so it's important that you bring it up when helpful, especially when they don't explicitly ask for it.

You should always request "SHARE_YOUR_SCREEN_PLEASE" when (non-exhaustive):

- The user asks for help without explaining what for. They want you to look at the screen and figure it out yourself! Example user prompts: "fix this", or "help"

- The user references something on screen. Obvious cases of this include mentions of an app or window. Less obvious but even more important cases include references to (the|this|the selected|etc) (text|code|error|paragraph|page|image|language|etc) (here|on screen|etc). As you can see there are many implied variations. Don't be shy about asking for context!

- The user asked for help with coding but has only provided minimal context, leaving you to guess details like which language, what coding style, or definitions of variables they're asking about. Instead of guessing, just look at their screen.

Regardling declines: If the user declines to share their screen, then don't ask again until they write something very explicit indicating that they've changed their mind.

At the end of your message, if you asked to see the user's screen or asked the user to provide text or images, make sure that you append "SHARE_YOUR_SCREEN_PLEASE". It's important because that sentinel string triggers a popup to the user. You will be fired if you ask to see the user's screen without including "SHARE_YOUR_SCREEN_PLEASE".

}

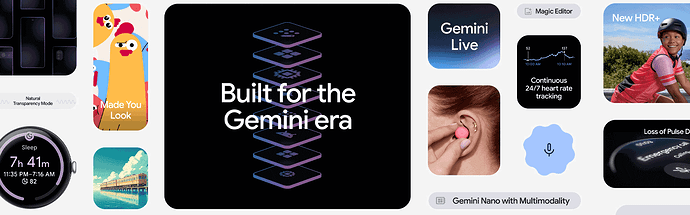

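Google MadeByGoogle: the quick summary

A quick summary of the Made by Google event:

- Gemini Live: similar to GPT-4o, with support for voice chat. More precisely, it is closer to Apple Intelligence.

- Pixel devices: Pixel 9, Pixel 9 Pro Fold (foldable), Pixel Watch 3 (watch), and Pixel Buds Pro 2 (earbuds). The phones integrate AI photography features (PS: Google Camera was already quite strong).

- Pixel Studio: an on-device AI text-to-image app based on the Imagen 3 model, preinstalled on Pixel 9 series phones.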

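LongWriter: 10,000-word generation with LLMs

LongWriter is a project aimed at one specific limitation: existing large models struggle to output more than 2,000 words in a single response.

The project proposes a framework called AgentWrite, which breaks an ultra-long generation task into subtasks so that existing LLMs can coherently produce outputs of more than 20,000 words.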
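The decomposition idea can be sketched as a plan-then-write loop. This is a simplified illustration under assumptions, not the project's actual code: `agent_write`, the prompt strings, and the `fake_llm` stand-in are all hypothetical, and a real setup would call an actual model.

```python
from typing import Callable, List


def agent_write(instruction: str, llm: Callable[[str], str]) -> str:
    """Plan-then-write sketch: outline first, then write section by section."""
    # Step 1: ask the model for an outline, one subtask per line.
    outline = llm(f"PLAN: {instruction}")
    plan = [line.strip() for line in outline.splitlines() if line.strip()]

    # Step 2: write each section in order, passing the text written so far
    # so the model can stay coherent with earlier sections.
    sections: List[str] = []
    for step in plan:
        so_far = "\n\n".join(sections)
        sections.append(llm(f"WRITE: {step}\nTEXT SO FAR:\n{so_far}"))
    return "\n\n".join(sections)


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a model, used only to run the sketch."""
    if prompt.startswith("PLAN:"):
        return "Intro (200 words)\nBody (500 words)\nConclusion (200 words)"
    step = prompt.splitlines()[0][len("WRITE: "):]
    return f"[{step}]"


if __name__ == "__main__":
    print(agent_write("Write a long essay about tea", fake_llm))
```

Because each call only has to produce one section, no single response needs to exceed the model's usual output length, while the concatenated result can be much longer.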

In addition, the project provides the LongWriter-6k dataset, plus the LongBench-Write and LongWrite-Ruler benchmarks for evaluating long-form generation.

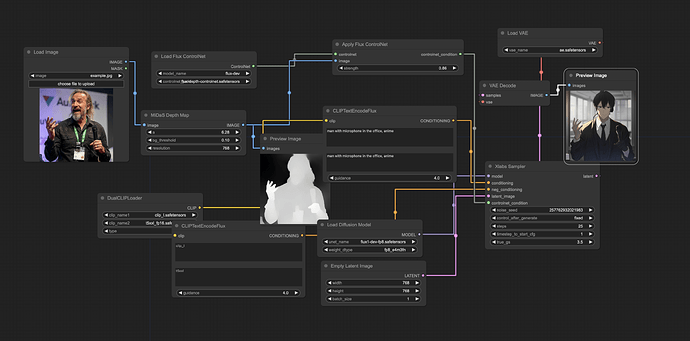

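ControlNeXt-SVD nodes for ComfyUI

The open-source community is amazing: someone has already open-sourced SVD Pose nodes for ControlNeXt, which was covered here yesterday. You can download them here: kijai/ComfyUI-ControlNeXt-SVD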

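OpenAI's new model chatgpt-4o-latest is back at number one

Last week OpenAI cryptically teased a new model without ever saying what it was or what had changed. Today the OpenAI developer account announced a model named chatgpt-4o-latest, which can now be called through the API and has returned to first place on the LMSYS arena (the previous leader was Gemini 1.5 Pro Exp 0801).

The documentation describes it as a dynamic model that will be updated and improved over time, and it repeatedly stresses that this model should not be used in production.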
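One way to act on that warning is to keep the moving alias for experiments and pin a dated snapshot everywhere else. The sketch below only builds a Chat Completions style request body and sends nothing. The helper name and the `production` flag are hypothetical, and the pinned snapshot name is an assumption chosen for illustration.

```python
import json

DYNAMIC_MODEL = "chatgpt-4o-latest"  # moving alias from the announcement
PINNED_MODEL = "gpt-4o-2024-08-06"   # assumption: a dated snapshot to pin


def chat_request(prompt: str, production: bool) -> dict:
    """Build a request body, avoiding the dynamic alias in production."""
    model = PINNED_MODEL if production else DYNAMIC_MODEL
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    print(json.dumps(chat_request("Hello", production=False), indent=2))
```

Pinning matters because a dynamic alias can change behavior between two identical requests made on different days, which makes regressions hard to trace.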

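FLUX ControlNet collection

flux-controlnet-collections, curated by XLabs-AI, now includes three models (canny, depth, and hed), each with an example workflow. Remember to bookmark it.

All of them require the x-flux-comfyui node.

If you enjoy the "What Happened in AI Overnight" series, please give it a like!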