```python
"""Translate using DeepL Pro's dl_session cookie."""

# pylint: disable=broad-exception-caught

import asyncio
import sys
from concurrent.futures import ThreadPoolExecutor
from random import randrange
from time import monotonic
from typing import Union

import httpx

from loadtext import loadtext  # external helper (not shown); returns the paragraphs as a list

COOKIE = "dl_session=81f94b98-497f-4f18-ab79-1ef25076f4c9"

HEADERS = {
    "Content-Type": "application/json",
    "Cookie": COOKIE,
}

URL = "https://api.deepl.com/jsonrpc"


def deepl_tr(
    text: str, source_lang: str = "auto", target_lang: str = ""
) -> Union[dict, Exception]:
    """Translate using DeepL Pro's dl_session cookie."""
    if not source_lang.strip():
        source_lang = "auto"
    if not target_lang.strip():
        target_lang = "zh"

    data = {
        "jsonrpc": "2.0",
        "method": "LMT_handle_texts",
        "id": randrange(sys.maxsize),
        "params": {
            "splitting": "newlines",
            "lang": {
                "source_lang_user_selected": source_lang,
                "target_lang": target_lang,
            },
            "texts": [
                {
                    "text": text,
                    "requestAlternatives": 3,
                }
            ],
        },
    }

    # Errors are returned, not raised, so a failed paragraph shows up in the
    # printed results instead of aborting the whole batch.
    try:
        # httpx.post() opens a fresh Client (and a new connection) for every call.
        resp = httpx.post(URL, json=data, headers=HEADERS)
    except Exception as exc:
        return exc

    try:
        jdata = resp.json()
    except Exception as exc:
        return exc

    return jdata


async def deepl_tr_async(
    text: str, source_lang: str = "auto", target_lang: str = ""
) -> Union[dict, Exception]:
    """Translate using DeepL Pro's dl_session cookie."""
    if not source_lang.strip():
        source_lang = "auto"
    if not target_lang.strip():
        target_lang = "zh"

    data = {
        "jsonrpc": "2.0",
        "method": "LMT_handle_texts",
        "id": randrange(sys.maxsize),
        "params": {
            "splitting": "newlines",
            "lang": {
                "source_lang_user_selected": source_lang,
                "target_lang": target_lang,
            },
            "texts": [
                {
                    "text": text,
                    "requestAlternatives": 3,
                }
            ],
        },
    }

    # A new AsyncClient (with its own connection pool) is opened for every call.
    async with httpx.AsyncClient() as client:
        try:
            resp = await client.post(URL, json=data, headers=HEADERS)
        except Exception as exc:
            return exc

        try:
            jdata = resp.json()
        except Exception as exc:
            return exc

    return jdata


def main():
    """Run."""
    texts = loadtext(r"2024-08-20.txt")

    then = monotonic()

    # default workers = min(32, (os.cpu_count() or 1) + 4)
    # with ThreadPoolExecutor(len(texts)) as executor:
    with ThreadPoolExecutor() as executor:
        results = executor.map(deepl_tr, texts)
        print(*results)

    time_el = monotonic() - then
    # paragraph count, total seconds, seconds per paragraph
    print(f"{len(texts)}, {time_el:.2f} {time_el / len(texts):.2f}")


async def main_a():
    """Run async."""
    texts = loadtext(r"2024-08-20.txt")

    then = monotonic()

    # One coroutine per paragraph, all awaited concurrently.
    coros = [deepl_tr_async(text) for text in texts]
    results = await asyncio.gather(*coros)
    print(results)

    time_el = monotonic() - then
    # paragraph count, total seconds, seconds per paragraph
    print(f"{len(texts)}, {time_el:.2f} {time_el / len(texts):.2f}")


if __name__ == "__main__":
    asyncio.run(main_a())
    main()
```
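Both `deepl_tr` and `deepl_tr_async` build the same JSON-RPC payload and apply the same language fallbacks. A minimal sketch of factoring that into one shared helper is below; `build_payload` is a hypothetical name, and the field names are copied from the script above, not from any documented DeepL API.

```python
import sys
from random import randrange


def build_payload(text: str, source_lang: str = "auto", target_lang: str = "") -> dict:
    """Build the LMT_handle_texts request body used by both functions.

    Hypothetical helper: fields are copied from the script above,
    not taken from official DeepL documentation.
    """
    # Same fallbacks as the original: blank source -> "auto", blank target -> "zh".
    if not source_lang.strip():
        source_lang = "auto"
    if not target_lang.strip():
        target_lang = "zh"
    return {
        "jsonrpc": "2.0",
        "method": "LMT_handle_texts",
        # Random request id, as in the original.
        "id": randrange(sys.maxsize),
        "params": {
            "splitting": "newlines",
            "lang": {
                "source_lang_user_selected": source_lang,
                "target_lang": target_lang,
            },
            "texts": [{"text": text, "requestAlternatives": 3}],
        },
    }
```

Each function would then start with `data = build_payload(text, source_lang, target_lang)` and keep only its own sync or async request logic.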

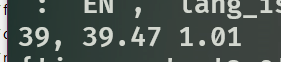

I tested it with an English text of 39 paragraphs. The timings:

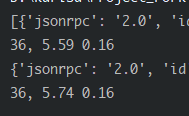

Async:


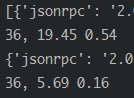

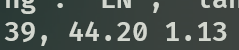

ThreadPoolExecutor, workers=8:


I also ran ThreadPoolExecutor with 39 workers, and the time barely changed.

Can any Python experts explain this?
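One possible explanation, offered as an untested hypothesis rather than a diagnosis: if the server handles requests from the same session one at a time (or throttles them), total time is bounded by the server, so neither the worker count nor the choice between threads and asyncio changes much. The stdlib-only simulation below illustrates that effect; `fake_request`, the 20 ms service time, and the lock standing in for server-side serialization are all assumptions, not measured DeepL behavior.

```python
import asyncio
import threading
import time
from concurrent.futures import ThreadPoolExecutor

SERVICE_TIME = 0.02  # assumed server time per request, in seconds
N_REQUESTS = 10


def run_threads(workers: int, serialized: bool) -> float:
    """Run N_REQUESTS fake requests on a thread pool; return elapsed seconds."""
    server_lock = threading.Lock()  # stands in for a server that serves one request at a time

    def fake_request(_: int) -> None:
        if serialized:
            with server_lock:
                time.sleep(SERVICE_TIME)
        else:
            time.sleep(SERVICE_TIME)

    start = time.monotonic()
    with ThreadPoolExecutor(workers) as executor:
        list(executor.map(fake_request, range(N_REQUESTS)))
    return time.monotonic() - start


async def run_async(serialized: bool) -> float:
    """Same experiment with asyncio.gather instead of threads."""
    server_lock = asyncio.Lock()

    async def fake_request() -> None:
        if serialized:
            async with server_lock:
                await asyncio.sleep(SERVICE_TIME)
        else:
            await asyncio.sleep(SERVICE_TIME)

    start = time.monotonic()
    await asyncio.gather(*(fake_request() for _ in range(N_REQUESTS)))
    return time.monotonic() - start


if __name__ == "__main__":
    for serialized in (False, True):
        print(
            f"serialized={serialized}: "
            f"threads(8)={run_threads(8, serialized):.2f}s "
            f"threads(39)={run_threads(39, serialized):.2f}s "
            f"async={asyncio.run(run_async(serialized)):.2f}s"
        )
```

With `serialized=False` every variant finishes in roughly one service time; with `serialized=True` every variant takes about `N_REQUESTS * SERVICE_TIME`, which has the same shape as "more workers, same time". Whether DeepL actually behaves this way would need checking, for example by comparing the per-paragraph time printed by the script with the latency of a single request.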

(If you want to try it yourself, just point the loadtext call at any English .txt file of about 40 paragraphs.)
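`loadtext` is not a standard-library module and its source is not shown. For anyone reproducing the test, here is a minimal stand-in, assuming it returns the file's paragraphs as a list of strings (which is how `main` and `main_a` use it). Treating each non-blank line as one paragraph is also an assumption.

```python
from pathlib import Path
from typing import List


def loadtext(path: str, encoding: str = "utf-8") -> List[str]:
    """Hypothetical stand-in for the post's loadtext helper.

    Assumes one paragraph per non-blank line.
    """
    lines = Path(path).read_text(encoding=encoding).splitlines()
    # Strip surrounding whitespace and drop empty lines.
    return [line.strip() for line in lines if line.strip()]
```

Saved as `loadtext.py` next to the script, it lets `from loadtext import loadtext` resolve unchanged.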